This document (Part XXIX Sequel-28) further elaborates on, and prepares for, the analogy between crystals and organisms.

Philosophical Context of the Crystal Analogy (V)

In order to find the analogies that obtain between the Inorganic and the Organic (as such forming a generalized crystal analogy), it is necessary to analyse those general categories that will play a major role in the distinction between the Inorganic and the Organic : Process, Causality, Simultaneous Interdependence, the general natural Dynamical Law, and the category of Dynamical System. All this was done in foregoing documents. Where we studied Dynamical Systems (previous document) we saw that we must supplement our earlier considerations about Causality, on the basis of our findings in Thermodynamics. In the present document we will continue to study the category of Dynamical System, again in connection with Thermodynamics, and further work out the amendments that were necessary with respect to the analysis of Causality.

Sequel to the Categorical Analysis of 'Dynamical System', and a discussion of Causality in terms of thermodynamics.

Sequel to the discussion of Clock Doubling on [0, 1] (See previous document)

We continue with the analysis of the many possible trajectories that -- one for each case, and determined by probabilities -- can emerge from a minimal area in the phase space of the clock doubling system. The minimal area was -- as an example -- set to be the interval 0.0101 of the 0 to 1 line segment.

Now we're going to subject our unspecifiable number 0.010101---- (which is only specified or defined as lying in the interval 0.010101) to the clock doubling algorithm (binary point one place to the right, dropping the 1 before the point when it appears). And we do this as follows : simultaneously with each round of clock doubling our initial number is extended by one digit (to its right-hand side), the value of which, 0 or 1, is randomly chosen (this we do because our number is supposed to be a number that is, from 0.010101 onwards, unspecifiable, that is to say, undefined). And because at the conclusion of each step in the clock doubling process the number loses one digit at its left side, the lengths of the digit strings that successively appear are equal and remain equal throughout the resulting sequence. On the other hand, the initial digit string, representing the system's initial condition, becomes longer and longer, that is to say, the initial number develops more and more, and thus becomes more and more accurately defined and known.

So let's go ( The digit that is randomly added is the last digit of each string ) :

Initial number : 0.010101---- ( = First state)

Second state : 0.101011----

(extended) Initial number : 0.0101011----

Third state : 0.010110----

(extended) Initial number : 0.01010110----

Fourth state : 0.101101----

(extended) Initial number : 0.010101101----

Fifth state : 0.011010----

(extended) Initial number : 0.0101011010----

Sixth state : 0.110101----

(extended) Initial number : 0.01010110101----

Seventh state : 0.101010----

(extended) Initial number : 0.010101101010----

Eighth state : 0.010100----

(extended) Initial number : 0.0101011010100----

Ninth state : 0.101000----

(extended) Initial number : 0.01010110101000----

Tenth state : 0.010001----

(extended) Initial number : 0.010101101010001----

Eleventh state : 0.100011----

etcetera.
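The procedure just carried out by hand can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `clock_doubling_trajectory` and `compartment` are hypothetical, and the digits that are "randomly added" can either be drawn by a random generator or be supplied explicitly, which allows the sequence worked out above to be reproduced exactly.

```python
import random


def clock_doubling_trajectory(known_digits, steps, added_digits=None, rng=None):
    """Apply clock doubling to a number known only by its first binary digits.

    known_digits : digits after the binary point that are specified, e.g. "010101"
    steps        : number of clock doubling rounds
    added_digits : optional string of 0s and 1s to use as the 'random' digits
    Returns (list of successive states, extended initial number), as digit strings.
    """
    rng = rng or random.Random()
    state = known_digits
    initial = known_digits
    states = [state]
    for i in range(steps):
        # One new digit is added at the right-hand side of the initial number.
        new_digit = added_digits[i] if added_digits is not None else rng.choice("01")
        initial += new_digit
        # Shifting the binary point one place to the right and dropping the
        # integer part amounts to dropping the leading digit of the string.
        state = state[1:] + new_digit
        states.append(state)
    return states, initial


def compartment(state):
    """L if the number lies in the left half of the 0 to 1 segment, else R."""
    return "L" if state[0] == "0" else "R"


# The digits added in the worked example above, in order of appearance.
states, initial = clock_doubling_trajectory("010101", 10, added_digits="1010100011")
for s in states:
    print("0." + s + "----", compartment(s))
```

Supplying the added digits by hand reproduces the eleven states listed above; leaving `added_digits` out gives a different trajectory on every run, which is the situation the text describes.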

Let's summarize the sequence of successively appearing states, as established above. At the same time (far right column) we indicate the compartment (L, or R) of the 0 to 1 line segment the particle successively visits.

| State | Digit string | Compartment |
|---|---|---|
| First state | 0.010101---- | L |
| Second state | 0.101011---- | R |
| Third state | 0.010110---- | L |
| Fourth state | 0.101101---- | R |
| Fifth state | 0.011010---- | L |
| Sixth state | 0.110101---- | R |
| Seventh state | 0.101010---- | R |
| Eighth state | 0.010100---- | L |
| Ninth state | 0.101000---- | R |
| Tenth state | 0.010001---- | L |
| Eleventh state | 0.100011---- | R |
| etcetera. | etcetera. | etc. |

The above results can be depicted geometrically. See the Figure HERE . In that Figure we see the (ontologically) statistical context (light brown), and within this context we see the trajectory (yellow) of the unspecifiable irrational number 0.010101---- ( The trajectory is given by the evolution of the interval 0.010101 , because initially the starting number was only defined by six digits after the binary point (010101), implying that it could be indicated by an interval only). As was to be expected, the trajectory, emanating from the volume-like initial condition 0.0101 remains within the statistical context. This context itself evolved from the whole volume-like initial condition, that is to say from the whole interval 0.0101 .

Trajectory from 0.010101---- :

0.010101----

0.101011----

0.010110----

0.101101----

0.011010----

Let us now determine the trajectory of another unspecifiable irrational number, emanating from the same initial condition 0.0101 . We will see that although we get a different trajectory, it also remains within the statistical context as evolved from this volume-like initial condition. As this new unspecifiable number we choose 0.010110---- . This is thus a number that is defined only by the first six digits after the binary point. The rest of the digits are not defined. They will be successively added in a random fashion, in the same way as we have done with respect to the number discussed above.

Initial number : 0.010110---- ( = First state)

Second state : 0.101100----

(extended) Initial number : 0.0101100----

Third state : 0.011000----

(extended) Initial number : 0.01011000----

Fourth state : 0.110001----

(extended) Initial number : 0.010110001----

Fifth state : 0.100011----

(extended) Initial number : 0.0101100011----

Sixth state : 0.000110----

(extended) Initial number : 0.01011000110----

Seventh state : 0.001101----

(extended) Initial number : 0.010110001101----

Eighth state : 0.011010----

(extended) Initial number : 0.0101100011010----

Ninth state : 0.110100----

(extended) Initial number : 0.01011000110100----

Tenth state : 0.101001----

(extended) Initial number : 0.010110001101001----

Eleventh state : 0.010010----

etcetera.

Let us summarize these results, and compare them with the results obtained earlier.

| State | First trajectory | Compartment | Second trajectory | Compartment |
|---|---|---|---|---|
| First state | 0.010101---- | L | 0.010110---- | L |
| Second state | 0.101011---- | R | 0.101100---- | R |
| Third state | 0.010110---- | L | 0.011000---- | L |
| Fourth state | 0.101101---- | R | 0.110001---- | R |
| Fifth state | 0.011010---- | L | 0.100011---- | R |
| Sixth state | 0.110101---- | R | 0.000110---- | L |
| Seventh state | 0.101010---- | R | 0.001101---- | L |
| Eighth state | 0.010100---- | L | 0.011010---- | L |
| Ninth state | 0.101000---- | R | 0.110100---- | R |
| Tenth state | 0.010001---- | L | 0.101001---- | R |
| Eleventh state | 0.100011---- | R | 0.010010---- | L |
| etcetera. | etcetera. | etc. | etc. | etc. |

Also with respect to our new results, we see that the trajectory, while differing from the former one, remains within the statistical context determined by the evolution of the volume-like initial condition, the interval 0.0101 :

Trajectory from 0.010110---- :

0.010110----

0.101100----

0.011000----

0.110001----

0.100011----

So on the basis of all these results we can imagine that from the initial condition 0.0101 (and any other initial condition) many potential trajectories can emanate. Most of them will depart from an unspecifiable number within this initial condition. These trajectories are then, of course, totally erratic and will visit every interval of the 0 to 1 line segment however small, that is to say that in our case the clock doubling system visits every region of its phase space. All the possible trajectories remain within the statistical context formed by the evolution of the whole initial condition (the interval 0.0101).
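How the statistical context itself evolves can be made concrete with exact fractions. The following is a minimal sketch under the assumption that the context at each step is simply the set of all numbers sharing the digits that are still known; the names `interval_of_prefix` and `evolve_context` are hypothetical.

```python
from fractions import Fraction


def interval_of_prefix(prefix):
    """Return (low, high) bounds of all numbers of the form 0.prefix----."""
    if not prefix:
        # No digit is known any more: the whole 0 to 1 line segment.
        return Fraction(0), Fraction(1)
    low = Fraction(int(prefix, 2), 2 ** len(prefix))
    width = Fraction(1, 2 ** len(prefix))
    return low, low + width


def evolve_context(prefix, steps):
    """Follow the interval through successive rounds of clock doubling."""
    contexts = []
    for _ in range(steps + 1):
        contexts.append(interval_of_prefix(prefix))
        # Each round shifts the binary point and discards one known digit,
        # so the interval doubles in width.
        prefix = prefix[1:]
    return contexts


for low, high in evolve_context("0101", 4):
    print(low, "to", high, " width", high - low)
```

Starting from the interval 0.0101 (width 1/16), the width doubles at every round, and after four rounds the context covers the whole 0 to 1 line segment. Every state of a trajectory that started inside 0.0101 necessarily lies inside the interval belonging to the same round.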

Randomness is for us, i.e. epistemologically, first of all unpredictability.

It is said that unpredictability is fundamental, i.e. absolute, in the case of a highly unstable dynamical system. This is correct. Whatever refined measuring devices one may invent, such systems remain unpredictable as regards their long-term behavior.

But the assertion that this absolute unpredictability as such implies objective randomness is not correct, because in this case too the system is unpredictable only because we are not (and never will be) capable of assessing its starting condition with the infinite precision that such highly unstable dynamical systems require. And from this exclusively epistemological nature of even absolute unpredictability an objective randomness does not necessarily follow (although it could be present). Absolute randomness becomes evident only when we consider a trajectory of such an unstable system that starts off from (at least) an unspecifiable irrational value, provided such a system can -- in this respect -- be legitimately compared with the (mathematical) clock doubling system. And it is important to know that most numbers are unspecifiable irrational numbers. So our clock doubling system has shown that absolute randomness (as contrasted with randomness that emerges purely from ignorance) is in principle possible. Randomness is thus not inherently epistemological, not necessarily subjective; it can be real, i.e. it can be a real and inherent property of some given dynamical system.

Generally, a trajectory of the clock doubling system, starting off from the volume-like initial condition, is erratic, and eventually visits every region of phase space however small this region is taken to be (See the Figure given earlier : If we were to follow the trajectory still further, we would see that it visits every region of the 0 to 1 line segment, because the digits of the starting number are randomly added, expressing the fact that this number is, apart from its location in a given interval, unspecifiable). In this respect the trajectory is not impeded by its necessarily remaining within the bounds of the statistical context or area (brown in the Figure, and determined by the [evolution of the] initial interval), because this statistical context will itself finally cover the whole 0 to 1 line segment (i.e. the whole of the system's phase space). This "eventually visiting every region of phase space" does not, however, come about because the statistical context eventually spreads all over phase space. The spreading of the statistical context or area does not refer to a single trajectory but to the whole of all potential trajectories emanating from the initial volume-like condition, and expresses the eventual loss of all predictability.

When the trajectory is finally allowed to visit every region (one after another) of phase space, the system can be said to be in equilibrium.

Let us determine when this is the case. The initial condition is a small area, defined by some interval, say, 0.0101 , as in the previous examples. A trajectory starts off from some sub-interval of this interval. This sub-interval, let it be (as in an earlier example) 0.010101 , is the (only) specified part of some irrational number that is unspecifiable beyond this sub-interval and which must be written down as 0.010101---- . When we now apply clock doubling, the resulting states are partly determined in the first five rounds (iterations). After those rounds the resulting states only consist of digits that were randomly added :

Trajectory from 0.010101---- :

0.010101----

0.101011----

0.010110----

0.101101----

0.011010----

0.110101----

0.101010----

0.010100----

0.101000----

0.010001----

0.100011----

etc.

Everywhere along the trajectory each state is determined by the previous state ( In an existing digit sequence the binary point is shifted one place to the right and the 1 before the point, when it appears, dropped). And the first few digits (appearing after the binary point) are determined by those already given in the initially given digit string of the starting number. But as soon as the binary point has passed those digits initially present, the chance of a 0 appearing after the binary point is precisely the same as that of a 1 appearing after the binary point (50 percent). This means equal probability for the jumping particle to end up in L (left compartment of the 0 to 1 line segment) or in R . And this (contrasting compartments with states) is perhaps a better criterion for the system's condition of equilibrium.
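This criterion can be checked numerically by generating many trajectories from the same six known digits and recording how often the particle is found in R at each step. The sketch below is only an illustration; the function name `right_fraction`, the number of trials and the seed are arbitrary assumptions.

```python
import random


def right_fraction(known_digits, steps, trials, seed=0):
    """Fraction of trajectories found in compartment R at each step.

    The compartment at step k is fixed by digit k of the (extended) initial
    number: 0 means L, 1 means R.
    """
    rng = random.Random(seed)
    counts = [0] * (steps + 1)
    for _ in range(trials):
        # Extend the known digits with freshly chosen random digits.
        digits = known_digits + "".join(rng.choice("01") for _ in range(steps))
        for k in range(steps + 1):
            if digits[k] == "1":
                counts[k] += 1
    return [c / trials for c in counts]


for step, fraction in enumerate(right_fraction("010101", 12, 4000)):
    print(step, round(fraction, 3))
```

For the first six states the fraction is exactly 0 or 1, because the leading digit is still one of the known digits 010101. From then on it fluctuates around 0.5, which is the equal probability for L and R described above.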

REMARK :

The clock doubling system, starting from an irrational number, seems able (within one Poincaré cycle so to say) to visit some number for the second time, but in fact it cannot. If it did, the system would enter into a loop, contradicting the irrationality of the starting number. Let us generate a part of a trajectory starting off from an unspecified irrational number, using the same method as in the previous two examples :

0.0101----

0.1011----

0.0110----

0.1100----

0.1001----

0.0010----

0.0101----

0.1010----

0.0100----

etc.

Here we see some numbers appear for the second time, such as the number 0.0101---- , but the number following its second appearance is different ( 0.1010---- as opposed to 0.1011---- ). In fact we can never speak of numbers like 0.0101---- (or any other such number) as repeating in some sequence, because these numbers are only partially known and remain so.
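The link between revisiting a number and entering a loop can be illustrated with fully specified (rational) starting numbers, for which the clock doubling map can be iterated exactly. This is a minimal sketch; the function name `doubling_orbit` is hypothetical, and the map is written as x -> 2x mod 1, which is the same operation as shifting the binary point and dropping the integer part.

```python
from fractions import Fraction


def doubling_orbit(x, max_steps=100):
    """Iterate x -> 2x mod 1 until a value repeats.

    Returns (orbit, loop_start), where loop_start is the index of the value
    that is visited for the second time, or None if no repeat was found.
    """
    seen = {}
    orbit = []
    for step in range(max_steps):
        if x in seen:
            return orbit, seen[x]
        seen[x] = step
        orbit.append(x)
        x = (2 * x) % 1
    return orbit, None


print(doubling_orbit(Fraction(1, 3)))  # 1/3 = 0.010101... (repeating)
print(doubling_orbit(Fraction(3, 8)))  # 3/8 = 0.011
```

A fully specified number that is revisited must be followed by the same successor as before, so the trajectory is caught in a loop: 1/3 alternates with 2/3, and 3/8 ends in the fixed point 0. An irrational starting number has a non-repeating digit string and therefore cannot behave like this.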

Because in the case of clock doubling we cannot speak about ordered and disordered states, we cannot speak in terms of relaxation or leveling-out. But this is evident because clock doubling is just a mathematical system. Its states do not contain energy or energy levels (to be leveled out). The only way we can introduce some sort of relaxation (leveling-out) is to see it at the statistical level. There we see that the evolution of the initial interval -- which, because it is a local island of positive probabilities amidst an ocean of zero probabilities, can represent 'stress' -- finally ends up in its uniform extension all over phase space. And this uniform extension can be interpreted as expressing a form of leveling-out or relaxation.

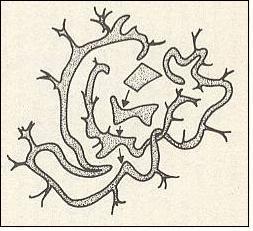

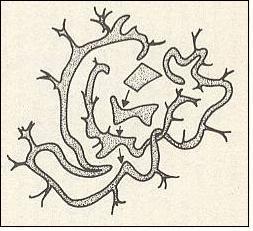

Figure above : Two-dimensional (in fact 3-dimensional) representation of the evolution of a volume-like initial condition of some unstable dynamical system. The initial area (or volume), representing positive probabilities of system states, eventually is going to extend all over phase space (at least in the sense that it is arbitrarily close to every point of phase space).

This could be interpreted as inevitable relaxation or leveling-out at the statistical level.

(Adapted from COVENEY & HIGHFIELD, The Arrow of Time, 1991)

From what we have learned from the clock doubling system and from the small digression into more-than-one dimensional systems, we can add some more things about areas and sub-areas in the phase space of unstable systems. See next Figures.

Figure above : Diagram of the initial condition as it is represented in the phase space of some dynamical system.

The ontologically smallest area that can represent the initial condition is indicated (red).

The smallest area that represents this initial condition insofar as it can be assessed by observation and measuring -- the epistemological limit -- is indicated (yellow) as being a larger area (containing the ontological limit).

Because of the volume-like character (as opposed to a point-like character) of the initial condition there is a multitude of potential trajectories emanating (one at a time) from this same initial condition according to a probability distribution over these trajectories. One of these trajectories is shown (next Figure). The light blue area symbolizes the area of positive probabilities (i.e. probabilities greater than zero). This area increases (in at least the sense that it will eventually come arbitrarily close to every point of phase space) as the time evolution of the system proceeds. See next Figure.

Figure above : Diagram of the initial condition and of subsequent system states as represented in the phase space of some dynamical system.

The ontologically smallest area that can represent the initial condition and the subsequent system states is indicated (red).

The smallest area that represents this initial condition and the subsequent system states insofar as they can be assessed by observation and measuring -- the epistemological limit -- is indicated (yellow) as being a larger area (containing the ontological limit).

The ontologically statistical context is a still larger area (light blue) -- containing the two smaller areas just considered (epistemological limit and ontological limit) -- indicating the positive probabilities of alternative system states.

The next Figure indicates another potential trajectory emanating from the same initial condition (while a different probability of actually appearing is assigned to that trajectory) :

Figure above : Same as previous Figure. Alternative trajectory added.

Because of the presence of an observational limit (epistemological limit, yellow) of the (assessment of the) initial condition, this initial condition as actually being observed, allows for still more potential trajectories. One of them is indicated in the next Figure. The greyish areas are the epistemologically statistical contexts or areas, following from the epistemological uncertainty (yellow) of the initial condition.

Figure above : Same as previous Figure. Alternative trajectory (purple), representing one of the potential trajectories implied by the epistemological uncertainty (yellow) of the initial condition. This trajectory remains within the evolving epistemologically statistical context (greyish area, including the light blue area).

If one assesses after the fact the state the system is in, one finds one out of the many potential small areas  (red + yellow) situated in any case within the relevant epistemologically statistical context or area (greyish), while at the same time it could turn out to be situated in the ontologically statistical context or area (light blue) (because this latter context or area is wholly contained within the former).

The system starts from its initial state and then goes through a series of subsequent states. Four of such consecutive states are indicated in phase space and represented not by points but by areas. In fact what they represent is a successive series of points in time. And let us then say that the third one (just choosing one from the sequence) indicates time t3 (while the initial condition [initial state] marks time t0 ).

The following statements can then be made :

(representing a point, which in turn represents the system's state) at a definite location within the light blue t3 area (ontologically statistical area). The area  indicates the epistemological and ontological uncertainty as to precisely where in phase space the system is, i.e. as to precisely what state the system is in. It is the outcome of an observation or a measurement.

(representing a point [and its uncertainty], which in turn represents the system's state) at a definite location within the light blue t3 area.

(representing a point [and its uncertainty], which in turn represents the system's state) indicating the location of the system in this epistemologically statistical area (of phase space).

A Qualitative Description of the Evolution of an Unstable Dynamical System, as seen in its Phase Space.

We will now qualitatively describe the evolution of the initial probability volume (volume-like initial condition) in the phase space of some unstable dynamical system (It can also be mathematically described, but our mathematical background is not sufficient to do so. Apart from this, I think that a qualitative description in particular is necessary for the categorical analysis of causality, as we see it at work in unstable dynamical systems. So, without taking into account the mathematical description [involving the Liouville equation], I hope that my qualitative description is correct. Should some reader find an error, I would be pleased to hear of it.). The pictorial image which will guide us is one that we have already given earlier :

Figure above : Two-dimensional (in fact 3-dimensional) representation of the evolution of a volume-like initial condition of some unstable dynamical system. The initial area (or volume), representing positive probabilities of system states, eventually is going to extend all over phase space (at least in the sense that it comes arbitrarily close to every point of phase space).

This could be interpreted as inevitable relaxation or leveling-out at the statistical level.

(Adapted from COVENEY & HIGHFIELD, The Arrow of Time, 1991)

The ensuing description refers to continuous real-world dynamical systems ( The clock doubling system, discussed above, is not a continuous system, but a discrete one [involving jumps] ).

The initial blob at time t0 represents a distribution of positive probabilities of certain very similar and highly ordered configurations (of elements), i.e. the initial configuration of system elements could be this or that highly ordered configuration. Outside this blob are configurations (system states) with zero probability at time t0 . When the system starts off, the maximally disordered potential configurations still have zero, or at least very low, probability of being materialized at some little time after t0 , because these maximally disordered potential configurations lie structurally still a long way off from the highly ordered initial configuration. See next Figure.

Figure above : Two possible configurations of the (16) elements of a dynamical system, representing two states of that system (each represented by a point in phase space). One is highly ordered (image on the left), while the other is highly disordered (image on the right). In order to go from the one to the other the system must go through a number of intermediate states.

So the highest probability of states directly coming after the initial state lies at the lower end of intermediately (moderately) ordered configurations, that is to say configurations that, although less ordered, are still much like the possible starting configurations. They -- as a type, expressing a certain degree of order -- are nevertheless more likely to be materialized (i.e. it is more probable that one of them will be materialized) than these starting configurations themselves, because there are many more less ordered configurations than there are ordered configurations ( There are [already purely mathematically] many more ways in which a configuration can be disordered than there are ways in which a configuration can be ordered).
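The counting argument can be made tangible with a deliberately simple toy model, which is an assumption made here for illustration and not the system of the Figure: 16 distinguishable elements, each of which sits either in the left or in the right half of a box. "Order" is then measured by how unevenly the elements are divided over the two halves. The function name `count_configurations` is hypothetical.

```python
from math import comb


def count_configurations(n_elements):
    """Number of arrangements with k elements in the left half, for k = 0..n."""
    return [comb(n_elements, k) for k in range(n_elements + 1)]


counts = count_configurations(16)
total = sum(counts)
for k, c in enumerate(counts):
    print(k, "left:", c, "arrangements, share", round(c / total, 4))
```

In this toy model there is exactly one arrangement with all 16 elements on one side, against 12870 arrangements with 8 elements on each side, out of 65536 arrangements in total. If every individual arrangement is equally probable, a type of arrangement is more probable the more members it has, which is the point made above.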

In mixing systems (moderately chaotic systems) this end stage can be represented by a very delicate (as compared to the initial blob) structure (see Figure above ) with many tendrils reaching out to every region of phase space. And although its particular shape keeps on changing, its overall shape (i.e. a shape, just such that it reaches into every area of phase space) remains constant, with a very very low probability of the system temporarily falling back to one or another of the more ordered configurations (more ordered states represented by points somewhere in phase space).

What does this final overall shape of the probability distribution function, as it is represented geometrically in phase space (which has, in contrast to our simplified two-dimensional image, many dimensions), namely as the above mentioned delicate structure sending out tendrils to every region of phase space, mean?

Well, while the starting blob only represents a positive probability for a number of highly ordered and similar configurations (states), the end structure (as a morphological type that stays the same) comprises positive probabilities for (almost) every configuration whatsoever, whether ordered or disordered, because now the blob has spread itself all over the phase space of the system, and thus covering (almost) all of the system's potential states. Thus we can say that although at equilibrium each single individual configuration (possible state of the system) has a positive probability for it to materialize (which was not so at the beginning of the system's career), a maximally disordered configuration (that is to say one, or any other of the set of maximally disordered configurations) has (among these positive probabilities) the greatest chance to materialize, simply because there are many more of them. Said differently : While in the beginning the system can, as regards its next states, explore only a limited area (volume) of phase space, determined by its current statistical context (the currently allowed degree of expansion of the blob), at equilibrium it can explore the whole of phase space, because the statistical context has spread all over it. So the probability distribution function has changed from high probability of one or another ordered configuration (so set at the beginning of the system's career) to high probability of one or another maximally disordered configuration.

Expounded along these qualitative lines it is clear, I hope, that an unstable dynamic system of interacting (and colliding) particles, starting off from some highly ordered state, will, with a very very high probability, evolve to a state of maximum disorder. And, as has been said earlier, because we have argued -- from the assumed fact that point-like initial conditions (and any other point-like system state) not only cannot be determined as such (by us), but also cannot exist -- that the probabilities involved are objective and intrinsic, the ontological status of the particular outcome of the dynamical system is only secondary, while its probabilistic background is primary.

Later, namely in the next document, we will discuss a two-dimensional analogue of Clock Doubling, the so-called Baker transformation. This transformation will (together with considerations concerning correlations that originate from collisions of particles) show us the way to irreversibility and the arrow of Time, and therefore will provide more insight into the nature of Causality. It will be shown that the Second Law of Thermodynamics acts as a selection principle for initial conditions : Some types of initial conditions are forbidden by this Law and thus lead to exclusion, resulting in truly irreversible processes in the case of unstable dynamical systems.

Thermodynamics

Energy

Thermodynamics is about processes mainly from the viewpoint of energy transfer and energy conversion. But what is energy? Energy is the capacity to do work.

Although this definition is supportable only in mechanical terms, it is nevertheless the broadest possible definition.

[ Thermodynamics demonstrates that a given amount of heat cannot be completely converted into work. But this is not supposed to mean that only a part of a given amount of heat is energy. It does not mean that there are two sorts of heat, one convertible into work and the other not. Heat, as such, can be converted into work, and therefore heat is energy.]

There is a variety of types of energy encountered in thermodynamic systems of which we mention potential energy, kinetic energy, heat energy, electrical energy, and chemical energy.

Potential energy refers to the energy that a substance possesses because of its position relative to a second possible position. A stretched spring possesses potential energy and does work as it springs back (i.e. as it is allowed to unstretch). Similarly, water behind a dam possesses potential energy that can be obtained if the water is made to turn a turbine while going through the dam. The energy released by both systems (spring, dam) is transformed into kinetic energy of some object (the spring itself [or other things it draws with it], blades of a turbine) that is moving. Thus the energy possessed by a moving object is called kinetic energy.

Chemical energy is the potential energy stored in chemical elements or compounds that can be released during a chemical reaction or a physical transformation. The chemical energy stored in elements and compounds determines the reactions that these substances will undergo. And most life processes can be discussed in terms of the storage and release of chemical energy. The chemical energy required or generated may be in the form of either heat or electrical energy. (OUELLETTE, R. Introductory Chemistry, 1970, p.15-16).

The considerations, regarding thermodynamics, that will now follow are largely taken from the textbook of R.WEIDNER, Physics, 1989, pp. 469, but also from some other sources.

Reversible and Irreversible Processes

In all the sections that follow, "the system" may consist of any well-defined collection of objects. The system could be a single spring, or a magnet, or some complicated gadget with many internal moving parts. Or the system can simply be an ideal gas (which is, to begin with, a diluted gas) in a container. Actually, we shall use an ideal gas to illustrate thermodynamic processes because the behavior of such an ideal gas is well known. Its equation of state is the general gas law :

pV = nRT

where V = volume, T = temperature, p = pressure, n = the amount of gas (in moles), and R = the universal gas constant.

This law says that V is proportional to the quotient of T and p ( the proportionality constant turns the proportionality relation into an equality relation).
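The proportionality can be illustrated numerically. The sketch below assumes that the general gas law is taken in its familiar form pV = nRT, so that the proportionality constant is nR; the amount of gas n, the gas constant R and the function name `ideal_gas_volume` are assumptions introduced here for the illustration.

```python
R = 8.314462618  # universal gas constant in J/(mol K)


def ideal_gas_volume(n_moles, temperature_k, pressure_pa):
    """Volume in cubic metres from pV = nRT, i.e. V = nR * (T / p)."""
    return n_moles * R * temperature_k / pressure_pa


v = ideal_gas_volume(1.0, 300.0, 100_000.0)
print(v)                                         # about 0.0249 cubic metres
print(ideal_gas_volume(1.0, 600.0, 100_000.0))   # doubling T doubles V
print(ideal_gas_volume(1.0, 300.0, 200_000.0))   # doubling p halves V
```

Doubling the temperature at constant pressure doubles the volume, and doubling the pressure at constant temperature halves it, which is what "V is proportional to the quotient of T and p" amounts to.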

A system is said to be in thermodynamic equilibrium when :

No heat enters or leaves the system in this irreversible process (Q = 0), and the gas does no work when it expands freely (W = 0) [ The gas does not increase its volume by pushing away a piston ]. Therefore, from the First Law, DELTA (U) = Q - W = 0 [ For work done on the gas this law reads DELTA (U) = Q + W, while for work done by the gas it reads DELTA (U) = Q - W ]. Since the temperature is directly proportional to the internal energy for an ideal gas, the free expansion represents an irreversible adiabatic expansion in which the temperature is the same in the final equilibrium state as in the initial state ( This free expansion cannot be described, however, as an isothermal expansion, even though the initial and final temperatures are the same. The expansion proceeds irreversibly. During this expansion, thermal equilibrium does not exist and a temperature cannot be defined for the system [ When there is no thermal equilibrium, there are temperature differences between the system's parts or between it and its environment, so at least when its parts have different temperatures there is no definite temperature for the system as a whole ] ).
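The bookkeeping for the free expansion can be written out as a short worked equation. The symbol c for the proportionality constant between internal energy and temperature, and the subscripts i and f for the initial and final equilibrium states, are introduced here only for the illustration.

```latex
\begin{align*}
Q &= 0, \qquad W = 0 \\
\Delta U &= Q - W = 0 \\
U &= c\,T \;\Rightarrow\; \Delta T = \frac{\Delta U}{c} = 0 \;\Rightarrow\; T_f = T_i
\end{align*}
```

Only the two end states enter this calculation. Nothing is said about intermediate temperatures, in keeping with the remark that no temperature can be defined while the expansion is under way.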

The above described free expansion of an ideal gas cannot be represented by a path in a pV diagram, but only by its beginning and end points.

A reversible process must in general consist of a succession of infinitesimal changes taking place slowly, so that at each stage the system is in thermodynamic equilibrium. Then, and only then, can a temperature be defined for all intermediate stages.

A process is isothermal when during the process the temperature of the system remains the same.

A process is isobaric when during the process the pressure of the system remains the same.

A process is isovolumetric when during the process the volume of the system remains the same.

A process is adiabatic when during the process there is no exchange of heat between the system and its environment. This can be accomplished by thermally insulating the system from its environment.

Any isothermal process is reversible. The temperature remains constant throughout the system, and the system is always in thermal equilibrium as its state changes.

A non-isothermal process, on the other hand, may or may not be reversible. Consider an adiabatic process ( Here, a temperature change cannot be undone by exchange of heat with the environment, so this is a non-isothermal process). If a process is to be both adiabatic and reversible, the process must take place fast enough that no thermal energy enters or leaves the system, but slowly enough that the system is at all times in thermal equilibrium. Such conditions can be achieved when the system is inside a good thermal insulator.

There are many examples of irreversible processes : a bursting balloon, a dropped egg, an overstretched spring, etc.

A perfectly reversible process is virtually unattainable. In the real macroscopic world, all transformations are somewhat irreversible.

Consider an ideal gas undergoing a reversible process from some initial state ( pi , Vi , Ti ) to some final state ( pf , Vf , Tf ). According to the First Law of Thermodynamics, the heat Qif supplied to the system, the work Wif done by the system, and the change in the system's internal energy DELTA (Uif) in going from i to f are related by :

$$Q_{if} = \Delta U_{if} + W_{if}$$

Both Qif and Wif depend on the process leading from i to f , but DELTA (Uif) = Uf - Ui does not. The internal energy of an ideal gas is a function of the state of the system, not of its history.

The next three Figures are about three possible reversible paths on a pV diagram (i.e. a pressure-volume diagram) between the same pair of initial and final states. The first two Figures are auxiliary Figures, serving to understand the third Figure, in which the three possible processes are depicted.

Figure above : Pressure-Volume Diagram depicting two possible states (black dots) of an ideal gas. The thin curved lines represent isotherms, which means that along such a line the temperature remains constant. There are two such isotherms, one representing a higher temperature, and one a lower temperature.

Figure above : Same as previous Figure. Initial pressure, final pressure, initial volume, and final volume indicated.

Figure above : Three reversible paths leading from state i to state f at a lower temperature.

(a) Isobaric expansion followed by isovolumetric cooling.

(b) Isothermal expansion followed by adiabatic expansion.

(c) Adiabatic expansion followed by isothermal expansion.

In the above Figure we see the three possible processes mentioned :

( First Law of Thermodynamics. If the work was done on the gas (instead of delivered by it), then this Law reads : Qif = DELTA (Uif) - Wif )

DELTA (Uif) is negative, so minus DELTA (Uif) is positive, consequently we have :

The three processes shown above are reversible. Indeed, a process can be represented by a continuous line on a pV diagram only if it is reversible. Only then does the system progress through a succession of states of thermal equilibrium with a well-defined temperature at each stage.

For an irreversible process, one can show the two end points (i.e. the initial and final point) on a pV diagram, but no continuous line can be drawn connecting them.

Now suppose that the three above processes are exactly reversed. The system now undergoes compression, rather than expansion. From state f to i , the internal energy change is now positive, heat is removed from the system, and work is done on it (volume gets smaller). Whenever a system undergoes a reversible process in which heat is converted to work, one may rerun the process to return the system to its initial state, with work then being converted to heat. The overall change in the internal energy over this special cycle is then zero. Moreover, since the overall process is represented by a single closed line (i.e. the system can go to and fro over that same line) on a pV diagram, rather than a loop, the heat entering the system over the entire cycle is zero, and the work done by the system over the entire cycle is also zero.

Before we discuss the heat engine (which leads us to an understanding of the Second Law of Thermodynamics), we will discuss in more detail : The First Law of Thermodynamics, Isothermal processes and Adiabatic processes. The ensuing considerations are backed up by ENSIE (Dutch encyclopedia), Part IV, 1949, p.184-185.

U = internal energy of the system.

Ui = internal energy of system in initial state.

Uf = internal energy of system in final state.

U1 = internal energy of system in state 1.

U2 = internal energy of system in state 2.

T = (absolute) temperature.

W = work.

Won = work done on the system.

Wby = work delivered by the system.

V = volume.

p = pressure.

Q = transported heat.

Qh = heat supplied to the system (heat taken in by the system).

Qc = heat removed from the system (heat leaving the system).

dX = (infinitesimally) small increase or decrease of quantity X.

$\int_1^2$ = summation of small quantities (say, dX) over the process-path leading from state 1 to state 2 of the system.

> = larger than.

< = smaller than.

The quantities Won and Wby already carry their sign, indicated by "on" and "by".

In the following text we do not distinguish between complete and partial differentials (among U, W and Q, only a small increase of U, that is to say dU, can represent a complete differential). All of these small quantities are indicated by d (as in dX ).

First Law of Thermodynamics

If we add to a system, for instance a cylinder filled with gas below a piston, a small amount of heat dQ , and at the same time perform a small amount of work dWon on the gas, for instance by compressing it, then, as a result, the internal energy U of the system must have increased by

$$dU = dQ + dW_{on}$$

If the amounts of Q and W are large, we must add all the successive small increases by integration. For U this is not necessary (we can just add up all the dU's ).

So if the change of the system is not small, for example because we add much heat, and compress the gas strongly, then we get :

$$U_2 - U_1 = \int_1^2 dQ + \int_1^2 dW_{on} \qquad (1)$$

where 2 and 1 are respectively the final state and initial state, and where the sum of the small changes in (internal) energy is

$$\int_1^2 dU = U_2 - U_1 ,$$

that is to say the energy difference between final and initial state.

This (i.e. equation (1)) is called the First Law of Thermodynamics, which formulates the law of energy conservation in the theory of heat.

From dU = dQ + dWon (First Law) we have dWon = dU - dQ, and from this in turn we have dWby = dQ - dU, which means that a system can deliver work only at the expense of heat supplied to it or of its own internal energy. So the First Law says that work delivered by any system is not for free. A machine that could deliver work for free is called a perpetuum mobile of the 1st kind.

As a special case, for a process in which no heat is transferred (dQ = 0) into or out of the system, a so-called adiabatic process , the total work done on the system (and the process being an adiabatic compression) is equal to its energy increase :

$$\int_1^2 dW_{on} = U_2 - U_1$$

If we want this adiabatic process to deliver work, i.e. when we have adiabatic expansion, we get :

$$\int_1^2 dW_{by} = -\int_1^2 dW_{on} = -(U_2 - U_1)$$

Work is delivered at the cost of the system's internal energy :

$$\int_1^2 dW_{by} = U_1 - U_2$$

The delivered work is positive, that is to say the left-hand part of the above equation is positive, and so is the whole right-hand part. U1 is the initial internal energy of the system and is therefore supposed to be positive. So we can conclude that in absolute values

U2 < U1 , which means that also here the delivered work is not for free.

The next four Figures illustrate adiabatic and isothermal processes or transformations, still with respect to a gas contained in a cylinder under a piston.

Figure above : Adiabatic compression (from state 1 to state 2).

Work done on the system is equal to the area (light blue) under the curve.

Adiabatic compression can be accomplished by pushing a piston (by a force K) in the indicated direction (i.e. from right to left), so that it compresses the gas. No heat can be exchanged with the environment, as indicated by the thermal insulator (yellow).

The applied work can be expressed in several ways :

$$\int K\,dx = \int p\,O\,dx = -\int p\,dV$$

where O is the surface area of the piston, K the force applied to it and dV the change in volume.

Adiabatic expansion is just the reverse of adiabatic compression. Now work is being delivered by the system. See next Figure.

Figure above : Adiabatic expansion (from state 1 to state 2).

Work performed by the system is equal to the area (light blue) under the curve.

This work consists in the piston being pushed (by the system) to the right : Initially the gas is in a compressed state, then it expands spontaneously.

If we see the two above processes (adiabatic compression, adiabatic expansion) together, then we can describe them as follows :

In an isothermal process the temperature remains constant. In order to keep the temperature constant the system must be able to exchange heat with its environment. See next Figures.

Figure above : Isothermal compression (from state 1 to state 2).

Work done on the system is equal to the area (light blue) under the curve.

The system is not thermally insulated. Heat Qc resulting from the compression (piston pushed to the left) is removed from the system, so that the temperature remains constant.

The reverse of isothermal compression is isothermal expansion :

Figure above : Isothermal expansion (from state 1 to state 2).

Work done by the system is equal to the area (light blue) under the curve.

The system is not thermally insulated. Heat Qh is taken in by the system, so that the temperature remains constant.

If a gas in a cylinder undergoes an isovolumetric change (thus a process in which the volume V remains constant), then the total heat added to the system is equal to the energy increase, because now no work is done (See equation (1) [First Law of Thermodynamics] above ) :

$$\int_1^2 dQ = U_2 - U_1$$

This energy is called the heat function for constant volume. See next Figure.

Figure above : A volume of gas is heated. Because the piston cannot move (it is fixed in position) the volume of the gas remains constant.

Also in the case of an isobaric process (thus a process in which the pressure p remains constant) there is such a heat function.

Figure above : A gas is heated. The gas is under a displaceable piston. The weight on top of the piston exerts a force in the downward direction (red arrow). This force causes the gas to have a certain pressure. And because the force is constant, the pressure of the gas is constant. The added heat causes the internal energy of the system to increase and the system to perform work (piston is pushed upward).

If we consider small changes, then we have :

dU = dQ + dWon (First Law of Thermodynamics) (Work done on the system).

dU = dQ - dWby (Work performed by the system).

dQ = dU + dWby

dW = pdV (change of work is equal to pressure [which is constant] times change of volume)

dQ = dU + pdV

U + pV = H

dU + pdV (pressure constant) = dH (change of enthalpy)

dQ = dU + pdV = dH (change of enthalpy).
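The step from U + pV = H to dH = dU + pdV uses the product rule together with the condition of constant pressure. A short worked version, written out under the same assumptions as the lines above (small changes, work done by the system equal to pdV), might read :

```latex
\begin{aligned}
H &= U + pV \\
dH &= dU + p\,dV + V\,dp && \text{(product rule applied to } pV\text{)} \\
dH &= dU + p\,dV && \text{(isobaric process: } dp = 0\text{)} \\
dQ &= dU + dW_{by} = dU + p\,dV = dH
\end{aligned}
```

The term V dp is the only place where the constancy of the pressure enters. For a process in which p varies, dQ and dH are no longer equal.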

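Because in an isobaric process the work is equal to pdV, which in our case is p(V2 - V1) (work done by the system, final volume becomes larger) we can derive the total heat added as follows :

$$\int_1^2 dQ = U_2 - U_1 + p\,(V_2 - V_1) = (U_2 + pV_2) - (U_1 + pV_1) = H_2 - H_1$$

We have found two heat functions : the energy U (for constant volume) and the enthalpy H = U + pV (for constant pressure).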

Entropy

If we exert mechanical work on a liquid, for instance by letting blades turn in this liquid, then the liquid gets warmer (Also if we compress a gas adiabatically its temperature increases). But the same end state could have been reached just by heating the liquid (or the gas). So generally, in a series of changes, the end state does not determine the total heat supplied or the total work done on the system.

But the sum of the heat supplied and the work done on the system is determined, it is determined in virtue of the First Law of Thermodynamics (dU = dQ + dWon), because this sum equals the energy difference between initial and final state.

If we transform a gas with pressure p1 and temperature T1 by adiabatically compressing it, into another state with higher pressure p2 and temperature T2 , then we must apply more work than when we first isothermally compress and then heat the system at constant volume. See next Figure.

Figure above : The heat supplied to the system depends on the path along which the final state 2 is reached from the initial state 1.

In the first case (adiabatically compressing the gas) there is no heat intake at all, while in the second case (first isothermally compressing and then isovolumetrically heating the gas) the net heat added to the system compensates for the smaller amount of work applied to the system (Although the volumetric decrease is the same in both cases [suggesting an equal amount of work done], the pushing of the piston is easier in the second case, because of the lower pressure involved, as can be seen in the above Figure ).
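The comparison of the two routes can be checked numerically. The following is only a sketch under stated assumptions : the gas is an ideal monatomic gas (Cv = 3R/2), both routes are reversible, and the function name `two_routes` and the numerical values are chosen here purely for illustration.

```python
import math

R = 8.314  # molar gas constant in J/(mol K)

def two_routes(n, T1, V1, V2, cv=1.5 * R):
    """Return ((work_on, heat_in) for route A, (work_on, heat_in) for route B).

    Route A: reversible adiabatic compression from V1 to V2.
    Route B: isothermal compression at T1 from V1 to V2,
             followed by isovolumetric heating from T1 to T2.
    Assumption: ideal gas with constant molar heat capacity cv.
    """
    gamma = (cv + R) / cv
    # Final temperature reached by the adiabat (T * V**(gamma - 1) is constant).
    T2 = T1 * (V1 / V2) ** (gamma - 1.0)

    # Route A: no heat exchange, so all work goes into internal energy.
    work_a = n * cv * (T2 - T1)
    heat_a = 0.0

    # Route B, step 1: isothermal compression (internal energy unchanged,
    # so the heat removed equals the work done on the gas).
    work_iso = n * R * T1 * math.log(V1 / V2)
    heat_iso = -work_iso
    # Route B, step 2: heating at constant volume (no work).
    heat_isovol = n * cv * (T2 - T1)

    work_b = work_iso
    heat_b = heat_iso + heat_isovol
    return (work_a, heat_a), (work_b, heat_b)

route_a, route_b = two_routes(n=1.0, T1=300.0, V1=2.0e-3, V2=1.0e-3)
print("route A: work on = %.1f J, heat in = %.1f J" % route_a)
print("route B: work on = %.1f J, heat in = %.1f J" % route_b)
```

In this sketch the work on route A exceeds the work on route B, while the sum of work and heat is the same on both routes, as the First Law requires.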

If the change of state is not small, then for a process holds that the sum of the added reduced heats (the reduced heat being dQ / T, the heat added divided by the absolute temperature at which it is added) is equal to the increase of entropy S of the system :

$$\int_1^2 \frac{dQ}{T} = S_2 - S_1$$

This is the Second Law of Thermodynamics for reversible systems, i.e. the condition for this law (thus when it has an equality sign) to hold is : all intermediate stages of the process that are passed through by it must be equilibrium states, the process must be a reversible process. This will generally be the case where the processes are slow.

On the other hand, if this condition is not met, then the added reduced heat is smaller than the entropy increase

$$\frac{dQ}{T} < dS$$

and so also (taking all the dQ / T 's together) :

$$\int_1^2 \frac{dQ}{T} < S_2 - S_1$$

This is the Second Law of Thermodynamics for irreversible systems.

So (more) generally the Second Law of Thermodynamics (in one of its many equivalent formulations) reads :

$$\int_1^2 \frac{dQ}{T} \le S_2 - S_1$$

In words : The total of added reduced heats in any process, whether reversible or irreversible, is equal to, or smaller than, the entropy increase of the system.

Free energy

Another thermodynamic potential is the free energy.

In the case of an isothermal process, in which the heat dQ is always added at the same temperature T, the sum of the added heats is, according to the Second Law, equal to TS2 - TS1 . Let's see how :

Because any isothermal process is reversible, we have :

$$\int_1^2 \frac{dQ}{T} = S_2 - S_1$$

(Second Law for reversible systems)

If we multiply both sides of this equation by T we get

$$\int_1^2 dQ = TS_2 - TS_1$$

We now introduce a new function (a new thermodynamic potential), the so-called free energy F = U - TS, which also (because U, T and S are) is a function of the state of the given system.

So the work done on the system in an isothermal process can be determined as follows :

$$\int_1^2 dW_{on} = U_2 - U_1 - \int_1^2 dQ = U_2 - U_1 - (TS_2 - TS_1)$$

This is equivalent to

$$\int_1^2 dW_{on} = (U_2 - TS_2) - (U_1 - TS_1)$$

And because

$$F = U - TS$$

we get

$$\int_1^2 dW_{on} = F_2 - F_1$$

In words : The work done on the system is equal to the increase of free energy.

When the system performs work, then this work is equal to the decrease of free energy :

$$\int_1^2 dW_{by} = F_1 - F_2$$

So in isothermal processes this free energy plays the role of the energy U in adiabatic processes, where we have :

$$\int_1^2 dW_{on} = U_2 - U_1$$

For example an ideal gas that is compressed in a cylinder at a temperature equal to that of the surroundings, possesses an energy which is equal to that of the expanded gas at the same temperature ( The internal energy of an ideal gas does not change in an isothermal process [WEIDNER, Physics, 1989, p.479] ), but it has a higher free energy. And indeed we can let the compressed gas perform work by letting it expand isothermally, whereby it takes in heat from the environment (ENSIE, IV, 1949, p.186).

Qualitatively this might be (roughly) explained as follows :

On isothermal expansion of a gas, the temperature T remains constant, and this means that the average kinetic energy of the gas molecules (or atoms) remains the same after the expansion. Because we here are considering an ideal gas, we can disregard the interactions between the molecules, meaning that the energy of the gas is completely represented by this kinetic energy of the molecules. Not all of this energy of the gas, when it is in its (mildly) compressed state, is free energy (which as such is energy that can be converted into work). Only a part of the total energy of the compressed gas is free energy. On isothermal expansion heat is imported from the surroundings. And this heat compensates for (but is itself not totally converted into work) the loss of energy effected by the work done by the gas, i.e. it compensates for the loss of free energy.
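The bookkeeping of this qualitative account can be made concrete with a small numerical sketch. It assumes a reversible isothermal expansion of an ideal gas, for which the work delivered is nRT log(V2 / V1). The function name `isothermal_expansion` and the numbers used are illustrative only.

```python
import math

R = 8.314  # molar gas constant in J/(mol K)

def isothermal_expansion(n, T, V1, V2):
    """Energy bookkeeping for a reversible isothermal expansion of an ideal gas.

    Returns (work_by, heat_in, delta_U, delta_F).
    """
    work_by = n * R * T * math.log(V2 / V1)  # work delivered by the gas
    delta_U = 0.0                            # ideal gas: U depends on T only
    heat_in = delta_U + work_by              # First Law: Q = DELTA(U) + Wby
    delta_S = heat_in / T                    # reversible process at constant T
    delta_F = delta_U - T * delta_S          # F = U - TS at constant T
    return work_by, heat_in, delta_U, delta_F

work_by, heat_in, delta_U, delta_F = isothermal_expansion(
    n=1.0, T=300.0, V1=1.0e-3, V2=2.0e-3)
print("work delivered      : %.1f J" % work_by)
print("heat taken in       : %.1f J" % heat_in)
print("change of energy U  : %.1f J" % delta_U)
print("change of free en. F: %.1f J" % delta_F)
```

The internal energy stays the same, the heat taken in equals the work delivered, and the free energy drops by exactly the amount of work delivered.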

We can elaborate on free energy a little more.

When an isolated system, like a gas in a well-insulated container, is in its most random state, it has achieved thermodynamic equilibrium. Then a single quantity, the maximum possible value of the entropy, is all that is needed to describe the macroscopic state of equilibrium and the end point of dynamic evolution. But for closed (possible exchange of energy with the environment, but no exchange of matter, for instance a bottle filled with gas or liquid) and open systems (both energy and material exchange is possible, for example an organism), which have an increasingly important dialogue with their surroundings, the state of maximum entropy must take into account the entropy of the surroundings as well.

If a crystal is melting (which is an isothermal process) heat energy is taken up from the environment, and this heat energy does not increase the temperature. Instead it is used to loosen the bonds between the molecules (it raises their potential energy rather than their average kinetic energy), with the result that the crystal structure is disrupted. If, on the other hand, a crystal is forming from a melt (which is also an isothermal process) this heat energy is given off to the environment again (where the entropy then increases). This is a nuisance if we wish to investigate the equilibrium properties of the crystal alone. For the sake of simplicity, we wish to avoid bringing the behavior of the environment explicitly into the discussion.

To exclude this environment, we can call on a new quantity, called free energy (as defined above), which assumes its minimum value at equilibrium. The free energy of a system represents the maximum amount of useful work obtainable from it. Although free energy is only a disguised form of the total entropy, its value is that it can be thought of as an intrinsic property of the crystal, thus removing the need to refer to what is happening in the environment. Free energy plays a central role throughout physics and chemistry in describing the equilibrium properties of systems, whether they be magnetic materials, refrigerators or chemically reacting mixtures.

Entropy and free energy are examples of thermodynamic potentials. By this we mean that their respective extrema -- the highest value of entropy and the lowest value of free energy -- reveal the position of thermodynamic equilibrium.

The extrema of thermodynamic potentials act as attractors for the system's evolution through time (COVENY & HIGHFIELD, The Arrow of Time, 1991).

As has been said, the free energy F is equal to U - TS, where U is the energy of the system and T is the temperature (measured on the Kelvin scale). This formula signifies that equilibrium is the result of competition between energy and entropy. Temperature is what determines the relative weight of the two factors.

At low temperatures, energy prevails (which means that it is predominantly energy that must go to a minimum), and we have the formation of ordered (weak-entropy) and low-energy structures such as crystals. Inside these structures each molecule interacts with its neighbors, and the kinetic energy involved is small compared with the potential energy that results from the interactions of each molecule with its neighbors. We can imagine each particle as imprisoned by its interactions with its neighbors.

At high temperatures, however, entropy is dominant and so is molecular disorder. The importance of relative motion increases, and the regularity of the crystal is disrupted. As the temperature increases, we first have the liquid state, then the gaseous state. As has been said, the extrema of thermodynamic potentials such as entropy and free energy define the attractor states toward which systems tend spontaneously, provided their boundary conditions (isolated system, open system) correspond to the definition of these potentials. Boundary conditions that correspond to entropy involve the isolation of the system from its environment, while those that correspond to free energy involve the openness of the system to its environment (PRIGOGINE & STENGERS, Order out of Chaos, Flamingo edition, 1986, p.126).
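The competition between energy and entropy in F = U - TS can be illustrated with a deliberately crude two-phase model. Everything in the following sketch is an assumption made for illustration : each phase is given one fixed energy U and one fixed entropy S (independent of temperature), and the numerical values are hypothetical, not data for any real substance.

```python
def free_energy(U, S, T):
    """Free energy F = U - T*S of a phase with energy U and entropy S."""
    return U - T * S

def stable_phase(T, phases):
    """Return the name of the phase with the lowest free energy at temperature T."""
    return min(phases, key=lambda name: free_energy(*phases[name], T))

# Hypothetical values per mole: (U in J, S in J/K).
# The crystal has the lower energy, the liquid the higher entropy.
phases = {
    "crystal": (0.0, 40.0),
    "liquid": (6000.0, 62.0),
}

# Temperature at which both free energies are equal: DELTA(U) / DELTA(S).
T_cross = (phases["liquid"][0] - phases["crystal"][0]) / (
    phases["liquid"][1] - phases["crystal"][1])

print("crossover temperature: %.1f K" % T_cross)
for T in (200.0, 350.0):
    print("T = %.0f K -> stable phase: %s" % (T, stable_phase(T, phases)))
```

Below the crossover temperature the low-energy phase has the lower free energy, above it the high-entropy phase does, which is the sense in which temperature sets the relative weight of the two factors.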

Free Enthalpy (ENSIE, IV, 1949, p.186)

In addition to the characteristic functions already mentioned, such as entropy, energy, enthalpy and free energy, there is another one, which is called THE thermodynamic potential or free enthalpy G = U + pV - TS = H - TS (where H is the enthalpy).

Meaning of Entropy. Third Law of Thermodynamics.

The theory of heat gives the exact methods to calculate at any given state of a system the corresponding entropy. It teaches to compute how much the entropy increases if we raise the temperature at constant volume (isovolumetric heating), and how much it decreases if we decrease volume at a constant temperature (isothermal compression).

In this way the entropy of an ideal gas is given by

$$S = C_v \log T + R \log V + \text{constant}$$

where log is the natural logarithm, Cv the specific heat at constant volume, T the temperature, V volume and R the gas constant. The additive constant is undetermined and does not have physical consequences (ENSIE, IV, 1949, p.186).
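Because the additive constant drops out of any difference, the formula can be used directly to compute entropy changes. The sketch below assumes one mole of a monatomic ideal gas (Cv = 3R/2). The function name `entropy_change` is chosen here for illustration.

```python
import math

R = 8.314  # molar gas constant in J/(mol K)

def entropy_change(T1, V1, T2, V2, cv=1.5 * R):
    """Entropy change per mole of an ideal gas between (T1, V1) and (T2, V2).

    Uses S = cv*log(T) + R*log(V) + constant; the constant cancels.
    """
    return cv * math.log(T2 / T1) + R * math.log(V2 / V1)

# Isovolumetric heating: the entropy increases.
dS_heating = entropy_change(300.0, 1.0e-3, 600.0, 1.0e-3)

# Isothermal compression: the entropy decreases.
dS_compression = entropy_change(300.0, 2.0e-3, 300.0, 1.0e-3)

# Reversible adiabatic compression: T * V**(R/cv) stays constant,
# so the two contributions cancel.
T_after = 300.0 * (2.0e-3 / 1.0e-3) ** (R / (1.5 * R))
dS_adiabatic = entropy_change(300.0, 2.0e-3, T_after, 1.0e-3)

print("isovolumetric heating : %+.3f J/K" % dS_heating)
print("isothermal compression: %+.3f J/K" % dS_compression)
print("reversible adiabat    : %+.3f J/K" % dS_adiabatic)
```

The signs agree with the statements above about isovolumetric heating and isothermal compression, and the reversible adiabatic case gives no entropy change, as expected for dQ = 0.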

Generally one can say : the higher the degree of disorder of the motions of the molecules, the higher the entropy. This view is given a more exact content in statistical mechanics. The solid phase (of a substance) at absolute zero temperature, the most ordered state that can be imagined, with stationary molecules or atoms regularly positioned at certain distances from each other, should then have the lowest entropy.

In 1906 Nernst put forward the hypothesis, often called Nernst's theorem or also the Third Law of Thermodynamics, that the entropy of all phases (solid, liquid, gas) of a given substance should have the same value at absolute zero temperature, a value which was later, significantly, set equal to zero by Planck. Although experiments at absolute zero itself are not possible, it turns out that when one approaches absolute zero the theorem of Nernst is increasingly satisfied. Quantum theory can explain this Law.

If we consider an isolated system, to which no heat is supplied (dQ = 0), then it follows from the Second Law, that for every process taking place in this system S2 - S1 is equal to, or larger than, zero, meaning that the entropy can only increase or at most remains constant :

$$S_2 - S_1 \ge 0$$

If the Universe can be considered to be an isolated system, for which the Second law applies, then the entropy of the Universe as a whole can only increase (because most processes taking place in this Universe are irreversible), until a state of equilibrium, of maximal entropy, finally is reached (ENSIE, IV, 1949, p.186). But here the possible effects of gravity (which play a paramount role in the Universe as a whole) are not yet considered.

If we express the Second Law as meaning that the entropy always increases or at most remains constant, then it is only valid for isolated systems, whereas this Law expressed as meaning that the sum of the added reduced heats is equal to, or smaller than, the increase of entropy (see the equation given above ), is valid for all systems.

Heat Engines, Heat Pumps, and the Second Law of Thermodynamics

In what follows we will dig deeper into the concept of entropy and into the meaning of the Second Law of Thermodynamics. For this we must accumulate more insight into the Carnot cycle.

Much of the following is taken from WEIDNER, Physics, 1989, pp. 471.

To use the energy stored in chemical or nuclear fuels, such as coal, oil, natural gas, or uranium, one typically first converts the fuel's potential energy to thermal energy and then converts some of the thermal energy to work. Chemical or nuclear potential energy can be converted to thermal energy with 100% efficiency (for example, by oxidation or nuclear fission). We shall see, however, that a process in which heat is converted to work through the use of a heat engine operating over a cycle can never have 100% efficiency.

Before we consider how to achieve the maximum efficiency for a heat engine, we first note some general properties of any heat engine (such as a steam engine) and heat pump (such as a refrigerator).

A heat engine is defined as any device that in operating through a cycle, converts (some) heat to work and discards the remainder into a cold reservoir. A heat engine is always returned to its initial state in a cycle. Most ordinary heat engines contain a gas as the working substance. The heat engines we consider are idealized, they have no friction. But even if somehow no energy at all is dissipated in friction, an engine can never be perfectly efficient. The reasons are far more fundamental.

A heat pump is just a heat engine run backward. More specifically, a heat pump is a device that in operating through a cycle, converts work into heat, at the same time transferring heat from a low- to a high-temperature reservoir. A familiar example of a heat pump is, as has been said, an ordinary refrigerator.

The most general type of heat engine is shown schematically in the next Figure. We skip any mechanical details of construction.

Figure above :

(a) Generalized form of a heat engine.

(b) Energy flow for a heat engine operating between the temperatures Th and Tc .

This general engine, represented by a simple circle in the Figure, is a system into and out of which heat can flow, and which, when taken through a complete cycle, does net work on its surroundings. It converts (some!) heat to work. The engine may be connected to a heat reservoir at some high temperature Th . We suppose that this reservoir contains so large an amount of thermal energy that even as it loses or gains heat from the engine, its temperature Th remains unchanged. A second heat reservoir, also of large thermal-energy capacity, remains at the low temperature Tc .

The thermal efficiency eth of a heat engine is defined as :

$$e_{th} = \frac{W}{Q_{in}} \qquad (2)$$

Here W is the work out per cycle and Qin is the heat in per cycle. This definition makes sense in that it compares what we get out of the engine in useful work with what we pay for in heat in. Since we suppose that all the heat enters at the same high temperature Th , we can write Qin = Qh .

Now we're going to apply the First Law of Thermodynamics to one cycle.

The First Law is about energy conservation. It is not merely about engines. It generally says that the change of internal energy of any system is equal to the sum of the change of heat and the work applied to, or performed by, the system. If this change of heat consists in heat supplied to the system, and the work consists in work done on the system, then it says that the (resulting) increase of internal energy of that system (for instance a cylinder of gas with a movable piston) is equal to the sum of the supplied heat and the work done on the system (pushing the piston). For very small amounts it reads : dU = dQ + dW .

If the supply of heat does not take place during a longer time (implying -- if it did -- a successive series of different small increments of heat), but takes place more or less in a single moment, we can write for dQ : DELTA (Q) or simply Q (instead of writing it with an integral), which then means the instantaneous supply of heat to the system. The same goes for the work done on the system : W is the instantaneous work done on the system (for example compressing a gas). The internal energy of the system then instantaneously changes. This change can be written as DELTA (U).

So we can now rewrite the First Law (now referring to more or less instantaneous processes [heat intake, work applied] ) :

$$\Delta U = Q + W$$

and emphasizing that the work W is done on the system :

$$\Delta U = Q + W_{on}$$

Returning now to our heat engine, we have to do with work done by the system (here the engine), and then the First Law must be written accordingly :

$$\Delta U = Q - W_{by}$$

which is equivalent to

$$Q = \Delta U + W_{by}$$

Here Q is the net heat added (which is what counts in the First Law).

In our heat engine this net heat is Qh - Qc .

So Q = Qh - Qc .

And thus we get :

$$Q_h - Q_c = \Delta U + W_{by}$$

And because we consider one cycle, i.e. starting from initial condition i , and finally returning to that same condition, the net change of the internal energy is zero, that is to say DELTA (U) = 0.

So we get :

$$Q_h - Q_c = W_{by}$$

which is of course equivalent to :

$$W_{by} = Q_h - Q_c$$

This says simply that work out equals net heat in.

Using this result in the formula (equation (2)) of thermal efficiency stated above we obtain :

$$e_{th} = \frac{W}{Q_h} = \frac{Q_h - Q_c}{Q_h} = 1 - \frac{Q_c}{Q_h}$$

This relation shows that an engine can have 100 percent thermal efficiency only if Qc = 0. An engine can be perfectly efficient only if no thermal energy is exhausted to the cold reservoir. A perfectly efficient heat engine would convert all the thermal energy entering it to work output when operated over a complete cycle, discarding none. This is impossible.
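As a small illustration of this relation, the following sketch computes the thermal efficiency of one cycle from the heat taken in and the heat discarded. The function name `thermal_efficiency` and the sample values are assumptions made here for illustration.

```python
def thermal_efficiency(q_h, q_c):
    """Thermal efficiency of a cyclic heat engine.

    q_h: heat taken in from the hot reservoir per cycle (must be > 0)
    q_c: heat discarded to the cold reservoir per cycle (0 <= q_c <= q_h)
    """
    if q_h <= 0:
        raise ValueError("q_h must be positive")
    if q_c < 0 or q_c > q_h:
        raise ValueError("q_c must lie between 0 and q_h")
    work_out = q_h - q_c      # First Law over one cycle, DELTA(U) = 0
    return work_out / q_h     # identical to 1 - q_c / q_h

e = thermal_efficiency(q_h=1000.0, q_c=600.0)
print("thermal efficiency: %.0f percent" % (100.0 * e))
```

The function accepts q_c = 0 because the First Law alone does not forbid it. It is the Second Law that rules this case out for a real cyclic engine.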

The reason? The Second Law of Thermodynamics. Like any other fundamental law in physics, it is confirmed by the circumstance that no exception to it has ever been found.

We shall encounter the second law of thermodynamics in several different but equivalent formulations. We have already encountered the second law as it relates to the behavior of a system of numerous particles, which always proceeds to states of greater disorder.

Our first statement of the second law of thermodynamics is as follows :

No heat engine, reversible or irreversible, operating in a cycle, can take in thermal energy from its surroundings and convert all this thermal energy to work.

That is to say :

$$Q_h = Q_c + W$$

Where Qh is the heat supplied to the engine, Qc is the heat handed over to the environment, and W is the work performed by the ideal engine (i.e. an engine with no friction).

For any cyclic engine, Qc > 0, and eth < 100 percent.

Consider now a heat engine run in reverse as a heat pump. See next Figure.

Figure above :

(a) Generalized form of a heat pump.

(b) Energy flow for a heat pump operating between the temperatures Th and Tc .

During each cycle, work W is done on the system, heat in the amount Qc is extracted from the low-temperature reservoir, and heat in the amount Qh is exhausted to the high-temperature reservoir. The net effect is that heat is pumped from the low- to the high-temperature reservoir. Note that the thermal energy Qh delivered to the hot reservoir is greater than the thermal energy Qc extracted from the cold reservoir (because W is added). This follows from the first law of thermodynamics. Let's see.

$$\Delta U = Q + W$$

where Q is the net heat taken in.

And when emphasizing that the work W is done on the system, we write :

$$\Delta U = Q + W_{on}$$

And because we consider one complete cycle (where thus the system has returned to its original state) DELTA (U) = 0 (i.e. no net change in internal energy). So we get :

$$0 = Q + W_{on}$$

In the heat pump, heat in the amount of Qc is taken in (from the cold reservoir [see drawing above] ), while eventually more heat (i.e. more heat than was taken in) in the amount of Qh is exhausted (to the hot reservoir). So the net amount of heat taken in is Qc - Qh . So Q = Qc - Qh (because Q [as it figures in the First Law as just stated] is the net heat supplied to the system [ In fact Q is just the supplied heat, but in the context of heat engines or heat pumps we must make a difference between initial heat intake and net heat intake ] ).

And now the above equation is equivalent to :

$$0 = Q_c - Q_h + W_{on}$$

which in turn is equivalent to :

$$Q_h = Q_c + W_{on}$$

where Qh is exhausted heat, and Qc is heat supplied to the pump. This formulation looks like, but is not the same, as the formulation given above for the second law.
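The heat pump balance just derived can be checked with a small Python sketch. The function name and the sample numbers (300 J extracted, 100 J of work) are assumptions made for illustration only.

```python
def heat_pump_exhaust(q_cold, work_on):
    """Heat Qh delivered to the hot reservoir in one heat pump cycle.

    q_cold  : heat Qc extracted from the cold reservoir, in joules
    work_on : work Won done on the system, in joules (must be > 0)
    """
    if work_on <= 0:
        # A cyclic heat pump cannot move heat "uphill" without work input.
        raise ValueError("Won must be positive")
    return q_cold + work_on      # Qh = Qc + Won


# Hypothetical sample values, chosen only for illustration.
q_cold, work_on = 300.0, 100.0
q_hot = heat_pump_exhaust(q_cold, work_on)

# First law over the complete cycle: net heat in plus work done on the system.
delta_u = (q_cold - q_hot) + work_on
print(q_hot, delta_u)
```

With these numbers Qh exceeds Qc by exactly the work put in, and the internal energy change over the cycle is zero.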

Nevertheless we recognize this as the Second Law, because when we reverse all inherent signs of the terms, that is to say :

heat in ==> heat out, heat out ==> heat in, Won ==> Wby , heat pump ==> heat engine, we obtain the first formulation (of the second law) :

Wby = Qh - Qc

The heat pump is effectively a refrigerator. It removes thermal energy from the cold reservoir. If this reservoir were to have a finite heat capacity, its temperature would fall.

No heat pump, reversible or irreversible, operating over a cycle, can transfer thermal energy from a low-temperature reservoir to a higher-temperature reservoir without having work done on it.

For any cyclic heat pump, Win ( = Won) > 0.

This statement of the second law tells us that if a hot body and a cold body are placed in thermal contact and isolated, it is impossible for the hot body to get hotter while the cold body gets colder (for this work is needed), even though this would not violate energy conservation, or the first law of thermodynamics. The observed fact that when a hot object and a cold object are brought together, they reach a final temperature between the initial temperatures is an illustration of the second law. Heat can spontaneously flow only from a hot body to a cold body.

The Carnot cycle

Lazare Carnot, the father of the French engineer Sadi Carnot (the latter : 1796-1832), had produced an influential description of mechanical engines. He concluded that in order to obtain maximum efficiency from a mechanical machine it must be built, and made to function, so as to reduce to a minimum : shocks, friction, or discontinuous changes of speed -- in short, all that is caused by the sudden contact of bodies moving at different speeds. In doing so he had merely applied the physics of his time : only continuous phenomena are conservative. All abrupt changes in motion cause an irreversible loss of the "living force". Similarly, the ideal heat engine, instead of having to avoid all contacts between bodies moving at different speeds, will have to avoid all contact between bodies having different temperatures.

The cycle for a good heat engine therefore has to be designed so that no temperature change results from direct heat flow between two bodies at different temperatures. Since such flows have no mechanical effect, they would merely lead to a loss of efficiency.

The ideal cycle is thus a rather tricky device that achieves the paradoxical result of a heat transfer between two sources at different temperatures without any contact between bodies of different temperatures. It is divided into four phases. During each of the two isothermal phases, the system is in contact with one of the two heat sources and is kept at the temperature of this source. When in contact with the hot source, it absorbs heat and expands. When in contact with the cold source, it loses heat and contracts. The two isothermal phases are linked up by two phases in which the system is isolated from the sources -- that is, heat no longer enters or leaves the system, but the temperature of the latter changes as a result, respectively, of expansion and compression. The volume continues to change until the system has passed from the temperature of one source to that of the other.

Along these lines Sadi Carnot recognized that of all possible heat engines operating between two temperature extremes, the most efficient was a reversible one that would -- to describe it again -- operate as follows :

Figure above : A Carnot cycle, consisting of two reversible adiabatic and two isothermal processes, operating between the temperatures Th and Tc . The thin black curved lines are isotherms (meaning that along such a line the temperature does not change).

The symbols in the Figure mark temperature increments or decrements and heat increments or decrements.

The area (light blue) enclosed by the loop is equal to the work W performed by the cycle.

Earlier we spoke about the thermal efficiency of any heat engine over a complete cycle. So also for the Carnot cycle the thermal efficiency is (related to the heats in and out) as follows :

eth = 1 - (Qc / Qh)

The thermal efficiency of any reversible cycle, including the Carnot cycle, is -- by the way -- independent of the working substance (steam, air, or whatever). So the ratio Qc / Qh does not depend on the working substance. Therefore, if the engine operates in a Carnot cycle, the ratio Qc / Qh can depend only on the temperatures Th and Tc at which the heat enters and leaves the system (in the two adiabatic steps there is no heat exchange at all with the environment : Q = 0).

So we can write :

Qc / Qh = Tc / Th

We could take this as the definition of a (reversible) Carnot cycle : a cycle is a Carnot cycle when this relation holds.

By combining the two expressions, we can write the thermal efficiency of a Carnot cycle in terms of temperatures as

eth = 1 - (Tc / Th)

This equation gives the maximum (maximum, because it is about a Carnot cycle) thermal efficiency attainable for any engine operating between the temperatures Th and Tc . We see that it is 100 percent (eth = 1) only if the engine exhausts heat to a cold reservoir at, and remaining at, the absolute zero of temperature -- clearly an impossibility. It is important to realize that the impossibility of 100 percent efficiency, as established here, is not because of friction, because we here consider ideal engines, that is engines without friction.

Heat engines typically have very low efficiency. For example, if an engine takes in heat at the high temperature of 200 °C and exhausts heat at a room temperature of 30 °C (Th = 473 K, Tc = 303 K), its maximum efficiency is eth = 1 - (303/473) = 36%. In any real engine, friction is present, the processes are not perfectly reversible, and the operating cycle is not a Carnot cycle. Consequently, the actual efficiency is even less.
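The numerical example can be reproduced with a short Python sketch. The function name is hypothetical; the temperatures Th = 473 K and Tc = 303 K are the ones used in the example above.

```python
def carnot_efficiency(t_hot_k, t_cold_k):
    """Maximum thermal efficiency between two absolute temperatures (kelvin)."""
    if not 0 < t_cold_k <= t_hot_k:
        raise ValueError("need 0 < Tc <= Th, both in kelvin")
    return 1.0 - t_cold_k / t_hot_k   # eth = 1 - Tc / Th


eth = carnot_efficiency(473.0, 303.0)
print(f"{eth:.1%}")   # about 36 percent
```

Note that the temperatures must be absolute temperatures. Inserting Celsius values would give a wrong and much higher figure.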

Now we will show (WEIDNER, Physics, 1989, p.478) that the Carnot cycle is the most efficient of all reversible cycles operating between two fixed temperature extremes.

Consider the cycle shown in the next Figure (left image).

Figure above :

Left image : A non-Carnot cycle operating between Th and Tc .

Right image : The reversible expansion can be approximated closely by a series of adiabatic and isothermal expansions.

From point a , the system expands reversibly along the line ab (neither an adiabatic nor an isothermal path), as the temperature decreases from Th to Tc .

where Tc and Th are the temperature extremes of the working substance in the engine.

Entropy

Again we will elaborate on that very important thermodynamic variable, the entropy of a system. As will be pointed out further below, the entropy is a quantitative measure of the disorder of the many particles that compose any thermodynamic system.

First we will, starting with the Carnot cycle, reason our way to a definition of entropy (in fact a definition of entropy change), and from there we will, in a later Section, arrive at the formulation of the Second Law of Thermodynamics in terms of entropy.

Earlier we had established the following with respect to the Carnot cycle :

If the engine operates in a Carnot cycle, the ratio Qc / Qh can depend only on the temperatures Th and Tc at which the heat enters and leaves the system (In the two adiabatic steps there is no heat exchange at all with the environment (Q = 0)).

So we can write :

Qc / Qh = Tc / Th

From this relation we can derive another very important relation by a simple mathematical manipulation :

Qh / Th = Qc / Tc

The latter equation means that for a reversible Carnot cycle the ratio of heat to temperature (which ratio is called the reduced heat) is the same for both the isothermal expansion and the isothermal compression (in the adiabatic steps there is no Q). Or in other words : the ratio of the heat taken in to the temperature at which it was taken in (and at which isothermal expansion takes place) is equal to the ratio of the heat given off to the temperature at which it was given off (and at which isothermal compression takes place). Or, also : in a Carnot cycle the intake of reduced heat Q / T is equal (in magnitude) to the exhaust of reduced heat Q / T. See next Figure.

In the analysis that follows we adhere to the following sign convention :

Heat entering the system is positive.

Heat leaving the system is negative.

Using this convention, we then have for the Carnot cycle

(Qh / Th) + (Qc / Tc) = 0

Thus, for a Carnot cycle, the sum of the quantities Q / T around a closed cycle is zero. This rule is actually more general. It holds for any reversible cycle, as we shall now show.
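That the reduced heats of a Carnot cycle add up to zero can be checked numerically. The following Python sketch assumes, purely for illustration, one mole of a monatomic ideal gas (gamma = 5/3) as working substance. The volumes, temperatures and function name are hypothetical choices, not taken from the text.

```python
import math

R = 8.314  # gas constant in J/(mol K)


def carnot_heats(t_hot, t_cold, v_a, v_b, n_mol=1.0, gamma=5.0 / 3.0):
    """Signed heats (Qh, Qc) of an ideal-gas Carnot cycle.

    The gas expands isothermally at t_hot from v_a to v_b, then adiabatically
    down to t_cold, is compressed isothermally at t_cold, and finally
    adiabatically back to the start. Heat entering the system is positive.
    """
    expo = 1.0 / (gamma - 1.0)
    # Adiabats obey T * V**(gamma - 1) = constant.
    v_c = v_b * (t_hot / t_cold) ** expo   # end of adiabatic expansion
    v_d = v_a * (t_hot / t_cold) ** expo   # start of adiabatic compression
    q_hot = n_mol * R * t_hot * math.log(v_b / v_a)     # heat in  (> 0)
    q_cold = n_mol * R * t_cold * math.log(v_d / v_c)   # heat out (< 0)
    return q_hot, q_cold


T_HOT, T_COLD = 473.0, 303.0
q_hot, q_cold = carnot_heats(T_HOT, T_COLD, v_a=1.0e-3, v_b=2.0e-3)
reduced_sum = q_hot / T_HOT + q_cold / T_COLD
print(q_hot, q_cold, reduced_sum)
```

Up to rounding error the sum of the reduced heats vanishes, while the heats themselves do not cancel : their difference is the work delivered by the cycle.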

Figure above : A reversible cycle approximated by Carnot cycles (light blue, yellow, green).

Any reversible cycle can be approximated as closely as we wish by a series of isothermal and adiabatic processes. That is, a reversible cycle is equivalent to a series of junior Carnot cycles. We can, for example, roughly approximate the cycle in the above Figure by several adjacent Carnot cycles. The above equation

(Qh / Th) + (Qc / Tc) = 0

holds for each of these. Adding the equations for the individual small Carnot cycles that approximate the original reversible cycle, we have

(Q1 / T1) + (Q'1 / T'1) + (Q2 / T2) + (Q'2 / T'2) + (Q3 / T3) + (Q'3 / T'3) = 0

where each T is the temperature at which the corresponding heat is exchanged.

We see that no heat enters or leaves the system apart from the processes at the periphery :

Q1 in, Q'1 out,

Q2 in, Q'2 out,

Q3 in, Q'3 out.

Therefore, we can write the last equation more generally as

Σ (Q / T) = 0

where Q stands for the net heat intake (Q1 in + Q'1 out, etc. [where the signs are already accounted for] ) and T stands for the temperatures at which intake or exhaust of heat took place. The summation is taken around the periphery of the original cycle. In the limit (i.e. when we have taken the smallest possible junior Carnot cycles, in order to obtain a most accurate approximation), we can then write

∮ dQ / T = 0

The circle on the integral sign indicates that the integration is to be taken around a closed path. We can call this integral a loop integral.

In words the last expression says that for any reversible cycle, the sum of the quantities giving the ratio dQ / T of the heat dQ entering the system to the temperature T at which the heat enters is zero around the cycle.

This is equivalent to saying that the integral (i.e. now the path integral) of dQ / T between any initial state i and any final state f is the same for all reversible paths from i to f . Let's explain this :

If we add up the two paths of the above Figure (both starting from i and both ending up at f ), starting at i , going around the whole loop, and thus ending up at i again, we get, when we take the directions of the two paths into account :

P1 + (-P2) [ = going around the whole loop] = 0

This is equivalent to

P1 - P2 = 0

which is equivalent to

P1 = P2

So the integral of dQ / T along the path P1 equals the integral of dQ / T along the path P2 between the same end points i and f .
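This path independence can be illustrated numerically. The Python sketch below assumes one mole of a monatomic ideal gas (so that a reversible heat increment is dQ = Cv dT + (R T / V) dV) and two hypothetical reversible paths between the same end states : one isothermal first and isochoric second, the other in the opposite order. All names and numbers are illustrative assumptions.

```python
import math

R = 8.314        # gas constant in J/(mol K)
CV = 1.5 * R     # molar heat capacity at constant volume (monatomic, assumed)


def integrate(f, a, b, steps=20000):
    """Midpoint-rule approximation of the integral of f from a to b."""
    h = (b - a) / steps
    return sum(f(a + (k + 0.5) * h) for k in range(steps)) * h


def isothermal(t, v1, v2):
    """(heat Q, integral of dQ/T) for a reversible isothermal volume change."""
    q = integrate(lambda v: R * t / v, v1, v2)
    return q, q / t          # T is constant, so the integral is simply Q / T


def isochoric(t1, t2):
    """(heat Q, integral of dQ/T) for reversible heating at constant volume."""
    q = integrate(lambda t: CV, t1, t2)
    s = integrate(lambda t: CV / t, t1, t2)
    return q, s


def along(steps):
    """Add up heat and reduced heat over the successive steps of a path."""
    return sum(q for q, _ in steps), sum(s for _, s in steps)


T_I, V_I = 300.0, 1.0e-3   # initial state i
T_F, V_F = 450.0, 3.0e-3   # final state f

q1, s1 = along([isothermal(T_I, V_I, V_F), isochoric(T_I, T_F)])   # path P1
q2, s2 = along([isochoric(T_I, T_F), isothermal(T_F, V_I, V_F)])   # path P2
print(q1, q2)   # the heats differ from path to path
print(s1, s2)   # the integrals of dQ / T agree
```

The total heat taken in depends on the path, but the integral of dQ / T does not. It is this property that makes the integral suitable for defining a state variable.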

We will now proceed further to arrive at a (macroscopic) definition of entropy (change) by using an analogy : With a reversible cycle (a Carnot cycle or a non-Carnot cycle) we have to do with a process course going from some starting point and finally ending up at this same starting point again, and where the summation of some quantity is equal to zero. Precisely the same is the case with a conservative force. And this gives us an idea of how to define entropy (macroscopically).

So we exploit a mathematical property that obtains in the relation between a conservative force F (which is a vector) and the associated potential energy U of the system [ For example, the wind is a conservative force (field) : If we, when taking a ride, experience fair wind, we 'pay' for that in terms of head wind when we return (along whatever path) back to where we started from ]. The potential energy difference between two end points i and f is related to the conservative force by

Uf - Ui = - ∫ F · dr (integrated from i to f )

where F (force) and r (displacement) are vectors. A conservative force points from higher toward lower potential energy. So when Uf is larger than Ui , the path from i to f runs against the force and the integral is negative; hence the minus sign before the integral.

This relation can be written, however, only if the force is conservative and

∮ F · dr = 0

with the net work (force x displacement) done by the conservative force equal to zero over a closed loop.
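The property used here can be made concrete with a small Python sketch. It assumes the simplest conservative case, a uniform (everywhere constant) force, such as a perfectly steady wind. The force components, the end points and the two routes are hypothetical values chosen for illustration.

```python
def work_along(force, points):
    """Work done by a uniform force along straight segments joining the points."""
    fx, fy = force
    total = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        total += fx * (x1 - x0) + fy * (y1 - y0)   # F . (displacement)
    return total


FORCE = (2.0, -1.0)                  # uniform force (assumed), in newtons
START, END = (0.0, 0.0), (3.0, 4.0)  # end points i and f, in metres

path_1 = [START, (3.0, 0.0), END]
path_2 = [START, (0.0, 4.0), (1.0, 5.0), END]

w1 = work_along(FORCE, path_1)
w2 = work_along(FORCE, path_2)

# Closed loop: out along path 1, back along path 2 traversed in reverse.
loop = work_along(FORCE, path_1 + path_2[::-1][1:])

potential_difference = -w1           # Uf - Ui = - (work done by the force)
print(w1, w2, loop, potential_difference)
```

Both routes give the same work, the closed loop gives zero, and so a potential energy difference between i and f is well defined. The integral of dQ / T over reversible paths behaves in the same way.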