This document (Part XXIX Sequel-27) further elaborates on, and prepares for, the analogy between crystals and organisms.

Philosophical Context of the Crystal Analogy (IV)

In order to find the analogies that obtain between the Inorganic and the Organic (as such forming a generalized crystal analogy), it is necessary to analyse those general categories that will play a major role in the distinction between the Inorganic and the Organic : Process, Causality, Simultaneous Interdependency and the general natural Dynamical Law. This was done in the previous document. In the present document we will consider the category of Dynamical System. Thereby we have realized that Thermodynamics must be involved, and that, as a result, our earlier considerations of Causality may be in need of supplementation and amendment.

Introduction

The foregoing analysis of inorganic categories (previous document) did not consider actual 'things', i.e. stable local dynamic patterns such as molecules, crystals, stars and the like. It was concerned with the continua and, with them, the unbounded. The general simultaneous interdependency still has this trait as well. With the ensuing study of natural (inorganic) dynamical systems this primacy of the unbounded comes to an end. Dynamical systems are the special cases of simultaneous interdependency. From now on we will consider the domain of bounded patterns, the domain of the finite, the domain of (intrinsic) things.

The (relatively) Stable Pattern (as a subpattern and dynamical system that stands out against the overall dynamic real-world background) must as such be understood in opposition to Process. It is akin to the State and shares its dissolvability in the process, but it has a natural closedness and a certain constancy that distinguish it from a mere state. A stable pattern is something that has external, but intrinsic, boundaries, an intrinsic shape, symmetry and promorph, and an intrinsic internal structure. Such patterns are intrinsic beings. They are not being, but beings (they were, by the way, the very subject of First Part of Website). A thing is not necessarily an intrinsic being : an example of a thing which (in this particular case) is definitely not an intrinsic being is illustrated in the next Figure.

Figure above : Conglomerate. Found on the beach in northern Israel.

A pebble (length 3.6 cm) consisting of fragments of rocks cemented together. The fragments are not orderly distributed in the pebble. The latter once was a part of a larger formation, that had formed by deposition of coarse rock fragments. These (primary) fragments were once elements of a (geological) dynamical system. Later this formation of tightly cemented rock fragments was broken up again, resulting, not in these same fragments again, but in new fragments, each containing original fragments (i.e. the primary rock fragments mentioned above). Such a new fragment, eventually resulting in the one that is depicted here, came under the influence of running water (or blowing wind) itself containing many small but hard particles (e.g. sand grains) that have polished the new fragment, resulting in a smooth pebble.

It is clear that the external shape of this pebble is extrinsic : its causes were wholly external. Its internal structure, too, consists of irregular primary fragments, distributed randomly with respect to the pebble and its shape. There is no thoroughgoing intrinsic relationship between the pebble's (overall) shape and its internal structure. At most, a few minor aspects of its internal structure have influenced its external shape. So it is clear that, although this pebble is a genuine thing, it is not an intrinsic thing, not an intrinsic being, but an extrinsic being. As it was found, it was an element of a dynamical system, which we could call "beach", but it is not itself a (complete and uniform) dynamical system. And, as we know, certain other geological products certainly can be intrinsic things : crystals. If their individual development took place in a uniform medium, their internal structure fully determines their external shape. That is why such a crystal is repeatable (i.e. it can originate again and again), while the pebble is unique with respect to its (precise) internal structure as well as its external shape.

An intrinsic being (or, equivalently, a dynamical system) has, as has been said, an external boundary and a shape of its own. It stands out from other co-ordinated beings and from its surroundings. It does not spatially or temporally fade out into something different. It maintains itself fairly well amidst the overall cacophony of the real-world flow.

Bounded area of simultaneous interdependency as dynamical system.

Much richer than the general simultaneous interdependency of the overall cosmos is, therefore, the special simultaneous interdependency within the bounded intrinsic beings themselves. At large distances the general simultaneous interdependency becomes vanishingly small anyway. It is then a magnitude which can legitimately be neglected in considering local dispositions. On the other hand, in the narrow domains of limited range but strong interconnection, the simultaneous interdependency is the dominating factor. Here it condenses into a tight system of mutual conditioning. By virtue of the appearance of such domains, the special activity system -- the dynamical system -- rises up from the general simultaneous interdependency, rises up as intrinsic being. And with it its special form of being also distinguishes itself from the overall simultaneous interdependency as a new category. The name "dynamical system" means that here everything is based on the mutual force relationship of parts or members, and that consequently the unity and wholeness of this being is conditioned from within. The latter is also well expressed by the alternative and equivalent name "intrinsic being".

A mere state of a (local) process is just a simultaneity section. It has no duration. But if we look at the content of the state, its morphology, structure, etc., then, although in many cases the content rapidly, but smoothly, makes way for another content, in many other cases a given content has a certain duration, that is to say, the content largely remains the same throughout a long sequence of consecutive states. Here either the (local) process runs slowly or its components sustain each other, resulting in a more or less constant overall structure or in a definite collocation of elements. Such 'states' (i.e. sequences of true states) do have a certain intrinsic resistance, in virtue of which they enjoy some stability.

The intrinsic being, characterized here as a more or less independent dynamical system, largely coincides with "substance + accidents" of First Part of Website . What we have here is some relatively constant substrate carrying properties that can replace each other.

Natural intrinsic dynamical systems (intrinsic beings).

When we call dynamical systems "intrinsic beings" they must be natural, which here means that their generation and their duration come from within. So human artifacts, such as houses, spoons, and machines, are not intrinsic beings, because their generation and duration (maintenance) come from without. On the other hand, a newly synthesized molecule is an entity that has generated itself on the basis of the properties of its constituents. What man has done in this case is just to set up the right conditions and then let Nature do her job. But in a certain respect such a molecule is the product of the higher entity, the objective spirit, which has consciously intervened in the overall real-world process.

Although no one thing is truly independent, there are things (beings) that enjoy a relative independency with respect to the environment. Such a thing cannot, however, do without the environment, because with it it has to exchange matter and energy in order to be generated and sustained.

From all this it should be clear what kind of inorganic things are intrinsic beings. They are cosmic entities like stars, galaxies, globular clusters (spherical star heaps), and other more exotic entities. Further, they are single crystals (sterro- and rheocrystals [i.e. solid and liquid crystals]) and perhaps things like raindrops, oil drops and flames, and other self-sustaining chemical systems (like the famous Belousov-Zhabotinsky Reaction) (for all this, see First Part of Website, referred to earlier). In the organic domain the self-containedness of certain dynamical systems is even more pronounced : individual organisms.

Dynamic boundary delineation in dynamical systems (relatively independent beings, intrinsic beings).

Knowing that the general simultaneous interdependency comprises all spatially co-existing entities, it is clear that the basic phenomenon in dynamical systems is that they draw for themselves a boundary of some kind at all, by which they contrast themselves against other co-existents. They mark themselves out 'against' the general simultaneous interdependency. However, they cannot do so by cancelling out the latter within their own spatial range. They do it rather by outstripping the broader active dynamical coherence that pervades them, by the force of internal connectedness. By virtue of this they contrast themselves from the overall wholeness in which they stand, as intrinsic things, without withdrawing from its broader connection with the rest of the world. They stay within it, while at the same time contrasting with it. They do not cut off the threads of the all-pervading overall simultaneous interdependence, but outstrip them as a result of the fact that their internal coherence, within the limits of its range, dynamically surpasses the coherence of the next higher whole.

So the marking out, or boundary, of a dynamical system is a function of its internal forces insofar as these oppose the dissolving influence coming from without. The external shape of such systems is thus not secondary, is not an extrinsic matter for them, but is eminently intrinsic. It is the essential shape for it, determined by itself, and maintained by it against deforming influences.

This phenomenon of dynamic delineation, of dynamic boundaries, as distinguished from merely material boundaries, is clear when one realizes that the boundary (delineating the system from its surroundings) in the majority of dynamical systems does not form sharp spatial surfaces, but that these systems spatially more or less fade out into their surroundings. This is, for instance, the case in all larger cosmic systems like galaxies and spherical star heaps. We cannot unequivocally indicate a sharp external boundary, and even when we could indicate with certainty the outermost members of such a (large cosmic) system, they would not form an external boundary, but rather be mere outposts of the system. The unity of such systems is only apprehensible from within, and only from a central zone outwards one can distinguish zones of lesser density (because when we want to start from the outside we wouldn't know where precisely to begin). In reality, however, this spatial sectioning is already based on an intrinsic dynamical coherence. In the mentioned cases this is the system's own gravitational coherence.

Perhaps one can say that solid bodies (which have definite surfaces as their boundaries) are more or less rare in the cosmos anyway. The large mass of matter in space is probably gaseous, be it extended in nebulas across large distances, be it condensed into spherical gas balls. Among the natural inorganic dynamical systems, perhaps only the (single) crystals can be considered to be solid bodies, sharply delimited with respect to the surroundings. When one, however, considers their complicated conditions of generation, involving special partial conditions in much larger systems, they also seem to be less independent. On the other hand we must realize that a crystal of the same species can be generated in a variety of (geological or chemical) conditions, making it possible to unearth the strict conditions (the absolutely necessary and sufficient conditions) of their generation, and then they turn out to be self-contained, that is to say they turn out to be genuine intrinsic beings.

In all cases of genuine dynamical systems, representing intrinsic beings, there always is a broader overall dynamic coherence that works through the given dynamical system and beyond it, but which is not as such differentiated within that dynamical system, because, although it is not neutralized, it is surpassed by the intrinsic coherence. The dynamical system only comes about by this non-differentiation (while at the same time developing it). That's why its external form is determined by its dynamic boundary zone.

For the overall coherence of the cosmos the effect of such dynamic delimitations -- also there where they occur in a relative way [the system becoming larger or smaller according to the degree of strength of the overall force field] -- is the division of the cosmic whole into dynamical domains or ranges, and with it the articulation of the cosmos into relatively stable closed beings (things, objects). The structure of the physical world is not based on the pervading continua only, nor on pervading lawfulness alone, but also essentially on the delimitation of closed beings. That is one of its basic rules. Delimitation and closedness are, however, functions of the dynamic undifferentiation of the overall simultaneous interdependency inside dynamical systems ( HARTMANN, 1950, p.456 ). On this rests the primary discreteness of the physical world, which codetermines all the more special articulation. The large mass of secondary systems already rests on the primary dynamical systems.

Yet another rule determining the structure of the cosmos becomes evident : The largest intrinsic beings that originate in this way are by no means always the highest. They are neither necessarily the most differentiated, nor necessarily the most stable. With respect to differentiation (internal diversity) the cosmos as a whole is an instructive example : It is built upon a few dynamical foundations, and its basic structure already falls short of the structure of the Earth, although the latter is vanishingly small compared with the cosmos as a whole. And still higher, but much smaller, forms of dynamical systems can be found on the Earth's surface. One should, however, not conclude the converse : The smallest intrinsic beings (dynamical systems) are not the highest either, although they enjoy a high degree of stability. The truly highest forms seem to lie in moderate orders of magnitude. The domain of organisms speaks for this. But these systems are already no longer merely dynamical ( HARTMANN, 1950, p.457 ).

General theory of dynamical systems.

With the dynamical system, especially with the "totality-generating dynamical system", we have -- as it was already found out earlier -- arrived at intrinsic beings.

While the states of a causal process as such, and even those of a regular causal process as such, can together form a continuously changing sequence without any specific content enduring, temporarily but nevertheless definitely, the dynamical system either is a local and finite unity, delimited from the overall simultaneous mutuality or interdependence and as such an intrinsic being, or generates one (or more) intrinsic beings. In any case, the process that we call "dynamical system" always involves intrinsic beings, while a causal process as such does not necessarily involve intrinsic beings, because the states in such a process could be just fragments of intrinsic beings, or an aggregate of intrinsic beings. In the dynamical system the states are, or eventually become, a whole intrinsic being (when a dynamical system produces many intrinsic beings, we -- in our considerations -- concentrate on just one of them).

And with the dynamical system, and, by implication, with intrinsic beings, we have, in our theory of category layers, finally arrived at the category of Substance, in the sense of the Substance-Accident Structure of an intrinsic being.

Accordingly, we consider Substance differently from HARTMANN, 1950, pp.280 : whereas he does not consider substance exclusively in connection with intrinsic beings, we do.

Substance in our view is that which (still having content) remains the same during (accidental) change of an intrinsic being, and which is at the same time a substrate (i.e. 'carrier') of those determinations that do not belong to the intrinsic being's essence. These determinations are collectively called 'Accidents'. And the Essence of such an intrinsic being is the physically interpreted dynamical law (and nothing else and nothing more) of the dynamical system which is or generates that intrinsic being.

So what we have done is to interpret the Aristotelian-Thomistic Substance-Accident structure dynamically (as HARTMANN has also done, but not in the context of the classical substance-accident metaphysics of Aristotle and St Thomas Aquinas). As has been said, this is fully developed in First Part of Website.

The dynamical system is a principle of discreteness (in contrast to continuity) and of the finite. With this, one comes closer to the observable. Categories of the finite should be the preferred categories of sight (not necessarily that of the eyes alone) (Anschauung). But the domain of the finite is still an infinitely wide domain. Only a small section of it is given to observation and experience : as regards order of magnitude, a section of moderate things. And exactly this section is almost entirely devoid of natural dynamical systems (intrinsic beings). It mainly consists of fragments, parts and artificial things, at least if we set aside, for just a moment, crystals and organisms. The latter are, or are products of, genuine finite dynamical systems. They have what HARTMANN calls a central core (in German : Inneres). And this central core we call the Essence of the intrinsic being.

In themselves, observation and experience are geared more than anything to apprehending wholes. But these are only wholes of sight, of image, of impression, not of ontological structure. And nothing comes more naturally to the naive consciousness than to attribute to each and every thing a central core, and to interpret such a thing as something independent. This consciousness bestows soul, and anthropomorphizes recklessly. But this bestowed central core is just something made up, not something natural, real and dynamical.

With respect to all this, we can say : Wholeness and Central Core are within the confines of consciousness hybrid categories (i.e. not pure categories).

The incipient understanding must first cut back this copious proliferation in order to arrive at ontological wholeness and the natural central core. For naive consciousness is, in virtue of its 'thing-idea', almost exclusively about fragments and parts. It does not see the natural wholes. And where they are at last shown to it, it does not recognize them as such. In any case, the 'thing-consciousness' is only seemingly concrete. In fact it floats, without knowing it, over and above, and away from, the true diversity of things. It is blindly isolating and generalizing, and thus in this respect an abstract consciousness (i.e. one that apprehends things abstractly). Categorically expressed : Its wholes lack the central core that legitimately belongs to them, and of which these wholes are the outside, and this irrespective of whether they have it inside or outside them (i.e. whether the wholes, as seen by naive consciousness, have their true central core within them or outside of them). In short, the viewpoint of the dynamical system is lacking in naive consciousness.

The inorganic world shows us a hierarchical system of dynamical systems, that is to say, the elements of a given dynamical system can themselves be dynamical systems, while the given dynamical system can be an element of a still larger dynamical system.

The elements of a dynamical system are, seen in a first approximation, the passive carriers of the system as a whole of which they are elements. They indeed play here the role of 'matter'. Their function in the system is as such a dynamical precondition. But this does not exhaust them, for they become, as existing within the system, its 'members' with a specific function. And in virtue of this 'member function', which they do not possess all by themselves (i.e. do not possess in virtue of what they are in themselves), they become something different from what they were. So the atom in the molecule, the atom in the crystal, the molecule in the raindrop, have different functions than when existing in isolation. So also the planetary body in the solar system, the star in the system of a star heap, etc. Whether the element also occurs freely or not is initially immaterial : It is not the literal 'addition' of the member function that counts, but only the dynamic origin of this function in the system.

The member function of the element contrasts with its matter function. As matter it determines the system (of which it is an element), it therefore is a determining factor, albeit a subordinated one. As member, on the other hand, it is determined by the system as a whole and is subject to the centralized determination of the system.

HARTMANN, 1950, pp.457 discusses the internal dynamics and stability of dynamical systems.

However, since 1950 a great deal of work has been done and many discoveries have been made in dynamical systems, especially in the context of modern chaos theory. So we here temporarily leave HARTMANN, and turn to these relatively recent considerations. This has already been done extensively in First Part of Website, so the reader should, if necessary, consult the many documents on dynamical systems there, where they are largely explained by means of computer simulations (Cellular Automata, Boolean Networks, L-systems, etc.).

One of the documents there gives a general exposition of dynamical systems and their ontological status, especially with respect to the Substance-Accident structure of intrinsic beings : Non-Classical Series, "Metaphysics and Dynamical Systems".

Because its considerations are highly relevant in the present context, we reproduce it here in full [with some extra indications between square brackets] :

But to clarify and deepen those observations we need something to know about DYNAMICAL SYSTEMS. Of course that means : natural dynamical systems, i.e. concrete, material, physical or biological systems. We shall discuss them. But we must emphasize that those natural systems are generally very complex, and only partially understood, especially the biological ones. Therefore we shall concentrate our expositions on abstract dynamical systems in the form of computer simulations. Which in fact means that when we discuss real dynamical systems, we are inspired by those abstract systems. Such systems are fully defined, and because of that better understood. Maybe they can supply us with the proper concepts needed for a revised Substance-Accident Metaphysics. We must thereby keep in mind, however, that those simulations are relatively simple and are not able to supply all the concepts needed.

By means of the study of dynamical systems we hope to find out more about the status of Substance, Accident, Essence, Individual, the per se and the per accidens. Maybe we can do this by means of concepts like Dynamical Law, System State, Initial Condition, System Elements, Attractors, Phase-portraits, Attractor Basin Fields, Dynamical Stability, and so on, which in fact means the following : an ontological interpretation of those concepts.

"Ontology " means here : The study of Being as such, the way of being of an individual thing, the status of being of the Essence, the status of being of the Universal, the status and way of being of properties in relation to Substance, the status of a process in terms of being or becoming, etc.

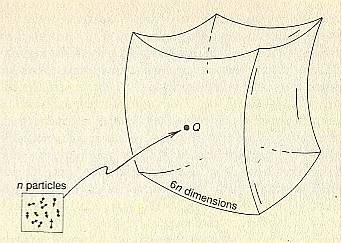

A DYNAMICAL SYSTEM is a process that generates a sequence of states (stadia) on the basis of a certain dynamical law.

Such a dynamical law, together with a starting-state (initial condition) is already the whole dynamical system.
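The statement that a law together with a starting state already is the whole system can be made concrete with a minimal sketch. The names `run` and `double_mod_7`, and the particular law chosen, are assumptions made purely for illustration and do not come from the text.

```python
from typing import Callable, List, TypeVar

State = TypeVar("State")


def run(law: Callable[[State], State], initial_state: State, steps: int) -> List[State]:
    """Return the initial state followed by `steps` successor states."""
    states = [initial_state]
    for _ in range(steps):
        # The law maps the present state to the next one; nothing else is needed.
        states.append(law(states[-1]))
    return states


def double_mod_7(x: int) -> int:
    """Hypothetical dynamical law: double the state, modulo 7."""
    return (2 * x) % 7


trajectory = run(double_mod_7, 1, 6)
print(trajectory)  # [1, 2, 4, 1, 2, 4, 1]
```

Starting the same law from another initial state yields another sequence, but in every case the law and the starting state are all that is required.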

When the system states together form a continuous sequence, the dynamical law will have the form of (i.e. will be described with) one, or a set of, differential equations, which describe, and dictate as a law, the changes of one or more quantities in time (and in space), and so (describe and dictate) the continuous sequence of states.

When, on the other hand, those states together form a discrete sequence, then the dynamical law will express a constant relation between (every time) the present state and the next state, and this relation is of such a nature that no infinitesimals are involved (in other words, the differences between successive states are nowhere infinitely small).
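In symbols, and only as a sketch, the continuous and the discrete case can be contrasted as follows. The state vector $\mathbf{x}$ and the functions $f$ and $F$ are notation assumed here, not notation used in the original, and spatial dependence is left aside for simplicity.

```latex
\begin{align*}
\text{continuous sequence of states:}\quad & \frac{d\mathbf{x}}{dt} = f\bigl(\mathbf{x}(t)\bigr) \\
\text{discrete sequence of states:}\quad & \mathbf{x}_{n+1} = F(\mathbf{x}_n), \qquad n = 0, 1, 2, \dots
\end{align*}
```

In both cases the initial condition, $\mathbf{x}(0)$ or $\mathbf{x}_0$ respectively, completes the system.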

Every process state (system state) is a (certain) configuration of, ultimately, system elements , which (configuration) is generally different with each successive process state. The dynamical law according to which those configurational changes proceed, is immanent in the (properties of the) system elements (For where else should it be seated?). The changes in the element configuration, and so also the (implied) succession of process states, is the effect of (i.e. is caused by) interactions between system elements (In such a process it is possible that some system elements disintegrate to other elements or form compounds with other elements, and then those products will interact with one another). These interactions are the concrete embodiment of the dynamical law in action.
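A cellular automaton offers perhaps the simplest illustration of a state as a configuration of elements, with the law seated in the elements and realized through their interactions. The sketch below assumes an elementary cellular automaton on a ring of cells, using rule 90 as an arbitrary example; the function name `step` and the choice of rule are illustrative assumptions.

```python
from typing import Tuple

Configuration = Tuple[int, ...]


def step(cells: Configuration, rule: int = 90) -> Configuration:
    """Apply an elementary cellular-automaton rule once, on a ring of cells."""
    n = len(cells)
    successor = []
    for i in range(n):
        # Each element interacts only with its two immediate neighbours.
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        neighbourhood = 4 * left + 2 * centre + right  # a value from 0 to 7
        # Bit number `neighbourhood` of the rule gives the element's next state.
        successor.append((rule >> neighbourhood) & 1)
    return tuple(successor)


state = (0, 0, 0, 1, 0, 0, 0)
for _ in range(3):
    print(state)
    state = step(state)
```

Every element carries the same small rule, and the succession of configurations is nothing but the result of all elements applying it to their neighbourhoods at once.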

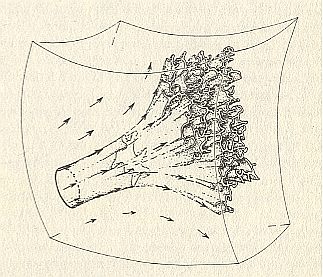

The changes of configuration could be such, that we can (after the fact) speak of SELF-ORGANIZATION of the system elements towards a coherent stable PATTERN. This pattern can be a final configuration of the system elements, to which the system clings, i.e. never leaving this configuration anymore. But the above mentioned pattern can also be a dynamical pattern, which also is coherent in itself, but which moreover alternates in a regular and coherent way.
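Both kinds of pattern, the final configuration that is never left and the regularly alternating one, can be detected in a small discrete system by following the states until one of them recurs. The following is a minimal sketch under the assumption of a finite set of states (otherwise the loop need not terminate); `find_attractor`, `halve` and `double_mod_6` are hypothetical names and laws chosen for illustration.

```python
from typing import Callable, Hashable, List, Tuple


def find_attractor(
    law: Callable[[Hashable], Hashable], initial_state: Hashable
) -> Tuple[List[Hashable], List[Hashable]]:
    """Follow the law until a state recurs; return (transient, recurring cycle)."""
    first_seen = {}
    history = []
    state = initial_state
    while state not in first_seen:
        first_seen[state] = len(history)
        history.append(state)
        state = law(state)
    start = first_seen[state]  # index at which the recurring part begins
    return history[:start], history[start:]


def halve(x: int) -> int:
    """Hypothetical law whose trajectories settle on a single final state."""
    return x // 2


def double_mod_6(x: int) -> int:
    """Hypothetical law with an alternating two-state pattern."""
    return (2 * x) % 6


print(find_attractor(halve, 5))         # ([5, 2, 1], [0])
print(find_attractor(double_mod_6, 1))  # ([1], [2, 4])
```

A cycle of length one corresponds to the final configuration to which the system clings; a longer cycle corresponds to the dynamical pattern that alternates in a regular way.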

Both cases of self-organisation can be interpreted as the formation of an organized whole, and this we will call a Totality (in all cases of real systems, that means a Uniform Being ), especially when the generated (dynamic or otherwise) pattern shows an intrinsic delimitation (a boundary) with an environment. When this all happens we speak of a Totality-generating dynamical system.

An ontological interpretation of a macroscopic Totality (a uniform thing) in terms of dynamical systems will proceed along the following lines :

We will first of all presuppose (the presence of) a Totality-generating dynamical system.

The process stadia of such a system now imply the corresponding process stadia of the Totality. A process stadium of a Totality is the 'Here-and-now Individual' -- we can also call this the Semaphoront -- while all those stadia, taken together, form the 'Historical Individual'.

Generation of a Totality means that the system elements, or a part thereof, together form a coherent whole, a totality-resultant of the dynamical system, coherent, either in space, or in time, or in space and time, and having an intrinsic boundary with an environment. So not just any (arbitrarily chosen) process (dynamical system) generates a Totality. Especially for abstract ' dynamical ' systems -- which are moreover just simulations of dynamical systems -- applies the following : They cannot supply all the revised metaphysical concepts, needed for the establishment of a revised Substance-Accident Metaphysics. The dynamical systems that are involved in the generation of full-fledged Totalities thus have some special properties.

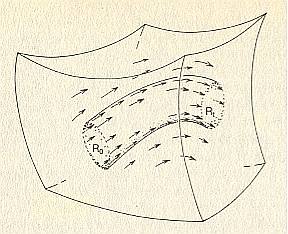

Every process stadium is a certain configuration of system elements and can be considered as an initial state , meaning that the system will be observed from that state onwards.

The real , actual (= ' historical ') initial state also is a configuration of system elements, but as configuration it originates from outside the system. It could even be a configuration which in principle cannot be generated by the system from other configurations (i.e. from other states). Such an actual initial state can also be random (i.e. a random arrangement of elements, or / and random states of the elements themselves). But the system can transform this random configuration, once given, into a (following) PATTERN (= a not-random configuration), and in turn into a next pattern, etc., and so leading to a sequencing of patterned process stadia. In other words the system is then able to organize the constituents into a real pattern. The elements (constituents) are going to take part in (the formation of) a Totality. The sequence of process stadia, taken as a whole, also can be considered as a pattern when it gives a reason to do so. But a real Totality is only formed when such a pattern has a, for the system intrinsic, boundary with an environment.

A local, individual action originating from the environment, thus coming from outside a running dynamical system, can be considered as a perturbation of a current process state. The perturbation then creates, as it were, a new initial state with respect to that process.
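How a perturbation acts as a new initial state can be sketched with a toy system. The law, the size of the disturbance and all names below are assumptions for illustration only: the undisturbed system alternates between two states, while an external change of a single state sends it to a different final state.

```python
from typing import Callable, List


def law(x: int) -> int:
    """Hypothetical dynamical law on the states 0..5."""
    return (2 * x) % 6


def run(law: Callable[[int], int], state: int, steps: int) -> List[int]:
    """Return the starting state followed by `steps` successor states."""
    states = [state]
    for _ in range(steps):
        states.append(law(states[-1]))
    return states


undisturbed = run(law, 1, 4)   # [1, 2, 4, 2, 4]: the system alternates

before = run(law, 1, 2)        # [1, 2, 4]: the same process, run for two steps
perturbed = before[-1] - 1     # action from outside changes state 4 into 3
after = run(law, perturbed, 2) # [3, 0, 0]: the process continues from the new state

print(undisturbed, before + after)
```

The law itself is untouched by the perturbation; only the state from which it proceeds has been replaced from outside.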

The relevant properties of the constituents (the system elements) of that process determine the nature of their interactions. Thus that which determines that those interactions take place in such and such a way is immanent in the constituents. The whole system, and thus the whole process, is further constrained by the general (global) state of the environment and thus by the general nature of physical matter, described by Natural Science in the form of general -- i.e. everywhere operating -- Natural Laws. These global (i.e. operating on a global scale) Laws of Nature are immanent, i.e. inherent in the general properties of physical matter.

The relevant properties of the system constituents (relevant, that is, to the interaction process taking place) can also in this non-global case -- the case of a special process, taking place somewhere and generating a Totality -- be interpreted as a law, namely the law that is valid specifically for that (type of) dynamical system : the Dynamical Law. This law is, as has been said, immanent in the relevant elements of the system. The pattern, i.e. the arrangement of these elements at a certain point in time, is extrinsic with respect to that Dynamical Law. It could even, as has been said, be an arrangement (configuration of system elements) which cannot, even in principle, be reached by the system itself from any initial condition whatever. Such an unreachable state, which as such can only originate from outside the system (i.e. be imposed on the system from outside), is called a 'Garden of Eden State' of the system (theoretical models show that in many systems a large proportion of the possible states are such Garden of Eden States). Such an unreachable configuration (of system elements) is either a real starting state of the dynamical system (i.e. the system happened to start with just such a configuration), and so comes from outside the system, or it is the result of a perturbation of a process state (situated) higher up in the sequence, a perturbation which took place at some point in time during the running of the system, and so also comes from outside the system. Thus by actions from outside, a current process state, itself also a configuration of system elements, can be changed, resulting in a new, i.e. other, configuration of system elements, which then functions as an initial state with respect to the further history of the process.
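For a small enough system, the Garden of Eden States can simply be enumerated: they are the configurations that no configuration has as its successor. The sketch below assumes, purely for illustration, an elementary cellular automaton (rule 90) on a ring of four cells; the function names are hypothetical.

```python
from itertools import product
from typing import List, Tuple

Configuration = Tuple[int, ...]


def step(cells: Configuration, rule: int = 90) -> Configuration:
    """Apply an elementary cellular-automaton rule once, on a ring of cells."""
    n = len(cells)
    return tuple(
        (rule >> (4 * cells[i - 1] + 2 * cells[i] + cells[(i + 1) % n])) & 1
        for i in range(n)
    )


def garden_of_eden_states(n_cells: int, rule: int = 90) -> List[Configuration]:
    """List every configuration that is not the successor of any configuration."""
    all_states = list(product((0, 1), repeat=n_cells))
    reachable = {step(state, rule) for state in all_states}
    return [state for state in all_states if state not in reachable]


unreachable = garden_of_eden_states(4)
print(len(unreachable), "of", 2 ** 4, "configurations have no predecessor")
```

In this toy case 12 of the 16 configurations can only occur as a starting state or as the result of an intervention from outside, which illustrates how large the proportion of such states can be.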

So a dynamical system implies a number of types (meanings) of : " outside the system " :

Before we explain these two ways, something must first be said about the status of element :

With " elements coming in from outside the system (and therefore quasi elements) " I mean elements which are not imported by the dynamical system itself, but which, by accident end up within the active domain of the system. If a Totality, or more generally, a pattern (of a higher order than that of the system elements themselves) is being generated within the active medium of the dynamical system, then it is possible that the elements belonging to that active medium, as well as possibly elements coming from without (that active medium), are going to participate in the formation of the Totality (the unified pattern, being generated by the dynamical system). The insertion into that Totality, in this last mentioned case (elements coming in from without), does not happen by virtue of the Dynamical Law of the relevant dynamical system, but is a perturbation from without. The present context is concerned with the effect of elements-coming-from-without on the stability of the dynamical system.

Now we are ready to discuss the above mentioned two ways by which the system tries to maintain itself :

If a given real dynamical system generates a full-fledged Totality, for instance (generating) a crystal from a solution, then this Totality is a Substance (in the metaphysical sense of the term), more specifically, it is a First Substance.

The Essence or (ontological) Second Substance, is the Dynamical Law of such a system.

All the observable properties of such a Totality are generated by the system. These properties are called Accidents (although they do not all have a status of 'generated by accident' ), and will be all kinds of quantitative properties like length, volume and the like, but also qualitative properties like configuration (which can end up as colors, densities and the like).

All these observable entities, the First Substance and its properties, are, as has been said, generated.

Borrowing terms from Genetics, we could say that those generated entities are seated in the 'phenotypical' domain (a domain of being, a way of being), while the corresponding Dynamical Law is seated in the 'genotypical' domain (another domain of being, another way of being). We discriminate between these domains, because the Dynamical Law as such is not observable. It abides in the collection of system elements, i.e. it is dispersed over those elements, without being the same as those elements because it is only dispersed over some (not all) aspects of every system element. Therefore the Dynamical Law is neither a thing, nor a property. It is abstract.

The First Substance and its properties are, on the contrary, concrete and directly observable.

The mentioned accidents belong to that first substance of which they are accidents. Some of them belong to it per se, others only per accidens.

All those accidents together make up the first substance [NOTE 2]. They can only exist as a first substance. They cannot exist on their own, because they are, each for themselves, just a determination of a first substance.

Remark : A different initial condition does not correspond to a different settling pattern in a per se manner. So, often a certain set of different initial conditions exists, each member of which brings the system to the same settling pattern. But such a set need not be the total set of possible initial conditions with respect to the system.

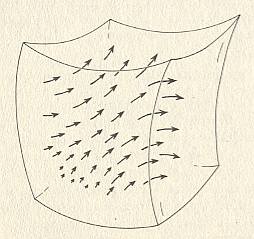

The total set of states, belonging to, and arranged according to, all possible trajectories, all leading to a certain attractor, say attractor A(1), forms, together with the attractor states themselves, the basin of attraction of the attractor A(1) (analogous to all the rivers that drain a certain area and all end up in the same lake).

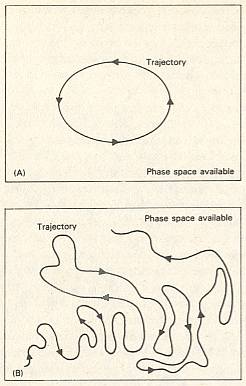

The total of all possible basins of attraction belonging to (i.e. corresponding to) a certain dynamical system is called the phase portrait (this term is used in the case of continuous systems) of that system, or the attraction-basin field (term used for discrete systems).

The attraction-basin field represents all possible system-state transitions of that dynamical system, and is, in a way, equivalent to the Dynamical Law of that system.

The Dynamical Law is the system law (seated) at a low structural level, while the corresponding attraction-basin field is this same law, but now (seen) from a global structural level.

There exist dynamical systems, for instance abstract Boolean Networks (and their real counterparts), where the dynamical behavior depends on a whole set of dynamical laws, but which nevertheless have only ONE attraction-basin field. It seems reasonable to interpret this attraction-basin field as THE (one) Law of the system, and so also as the Essence of the Totality (when a Totality is indeed generated by the system). But of course the mentioned set of dynamical laws can also be interpreted as THE (one) Dynamical Law and so as the Essence of the (generated) Totality.

Just the set of all possible system states corresponding with a certain dynamical system is called the phase-space (term used for continuous systems) or the state space (term used for discrete systems) of the system. The system thus organizes its state-space into (a relatively small number of) basins of attraction, the attraction-basin field, by establishing all its possible state transitions (which means that the possible states are now related to each other in a specific way). And so the dynamical system ' categorizes ' the state-space and because of that the resulting attraction-basin field can be considered as the ' memory ' of the system, especially when such a system is a Boolean Network. A Boolean Network is a discrete dynamical system with only two-valued variables. Such systems constitute a possible basis for the study of genetic and neural networks (See the Essay on Random Boolean Networks [ First Part of Website, Non-Classical Series ] ).
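How a system 'categorizes' its state space can be illustrated with a very small Boolean Network. The sketch below is only an illustration under stated assumptions : the three update rules in `update` are invented for this example and do not come from the Essay on Random Boolean Networks, and `attractor_of` and `basin_field` are hypothetical helper names. The code walks through every one of the eight states, follows its trajectory until a state repeats, and groups the states by the attractor they end up in.

```python
from itertools import product

def update(state):
    """Hypothetical Dynamical Law of a three-node Boolean Network (a, b, c)."""
    a, b, c = state
    return (b, a, a & b)

def attractor_of(state):
    """Follow the trajectory from `state` until it repeats; return the cycle."""
    seen = []
    while state not in seen:
        seen.append(state)
        state = update(state)
    return frozenset(seen[seen.index(state):])

def basin_field():
    """Map each attractor to its basin: all states whose trajectory ends in it."""
    basins = {}
    for s in product((0, 1), repeat=3):
        basins.setdefault(attractor_of(s), set()).add(s)
    return basins

field = basin_field()
for attractor, basin in field.items():
    print("attractor", sorted(attractor), "<- basin", sorted(basin))
```

The local rules in `update` and the global grouping returned by `basin_field` contain the same information, seen once at the level of the elements and once at the level of the whole state space.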

The above given interpretation of the notions Totality, Identity, Essence, Here-and-now Individual ( Semaphoront ), Historical Individual, etc. is inspired by the study of simple abstract models of dynamical systems (in the form of computer simulations), which pretend to represent processes, and aspects thereof, in the Real World, especially those processes which show self-organisation of system elements towards stable coherent patterns. Such are for instance crystallisation processes and ontogenetic processes (the last mentioned are processes relating to the formation of an individual organism).

But we must realize that we, in proceeding along these lines, make use of formidable simplifications of natural real processes (natural real dynamical systems), resulting in such models, i.e. reducing them to such models. This is, in my view, inevitable because the processes in the Real World generally are much too complex and much too strongly interwoven and intertwined with other processes to allow directly from them (i.e. them serving as a theoretical point of departure) a definitive ontological interpretation of such Totality-generating processes. The models must be part of the point of departure of such an attempt at an ontological interpretation.

In the present context (theory of category layers) "dynamical system" is a general category ( If / Then constant) of the Inorganic Layer : Every concretum in this Layer is determined by it : Everything in the physical domain is either a dynamical system (or more of them), or is a fragment of one or more dynamical systems. Only a being that is one single dynamical system is a genuine intrinsic being.


About the Analogy between Crystals and Organisms (or, more generally, between Inorganic and Organic) ( Analogy and Over-forming )


It is perhaps useful to remind the reader where all this is supposed to lead.

Let us symbolize the phenomenon of over-forming by a 'core' ( = that which becomes overformed) which is 'clothed' by something else ( = that which overforms the core) :

With this phenomenon of over-forming a fundamental difference is involved, viz., the difference between that which becomes over-formed and that which is the result of the over-forming.

The common possession of the categorical core in category change, i.e. over-forming, is assumed by HARTMANN only for all general categories that he has established for the Inorganic. All other new features that appear in the domain of organisms he assumes to be determined by n e w categories, not occurring at all in the Inorganic. So HARTMANN is expected to deny any more or less far-reaching crystal analogy (and, more generally, any far-reaching Inorganic-Organic analogy), which can be symbolized by :

With respect to categories determining specifically organic (but still general) functions or structures (but not with respect to the categories 'Causality', 'Simultaneous Interdependence', and other such categories), HARTMANN in fact does not hold that an inorganic core is over-formed. He holds instead the appearance of a totally new category, determining such an organic function (or structure).


As has been said, according to HARTMANN all categories of the Inorganic (as established by him) pass over into the Organic. None of them breaks off. But in doing so they are modified, i.e. they are over-formed, as a result of the presence of the organic NOVUM. Surely, the NOVUM itself is a new category, but (in contradistinction to a new category that is the result of over-forming) one that has originated as a result of a fortuitous (and therefore irrational) fluctuation of an existing category, as explained earlier.


Some -- maybe all -- organic functions can be simulated. This means that they can be 'mimicked' with inorganic means, which can be certain (laboratory-based) chemical reaction systems, but, most importantly, computers. Epistemologically, i.e. methodologically, such simulations are (called) models. The possibility of simulation is based on the assumed fact that at least the core of the organic function to be inorganically simulated exists in the Inorganic, or can be set up with exclusively inorganic means. Insofar as it is set up by conscious human activity, such a simulation is a 'product' of the human mind, and belongs to the Super-psychic Layer of categories, i.e. it is a concretum whose determining categories belong to that category Layer. However, seen in and by itself, the product or result of such a simulation is an inorganic entity, totally determined by inorganic categories. This is so because the simulation and its product are (as has just been said), first of all, a product of a final nexus as it operates in the super-psychic Layer. But such a nexus works as follows :

We see, simulation is the reverse of over-forming, and over-forming creates true analogy.

Simulation of Intelligence ?

I n t e l l i g e n c e, as a concretum, belongs to the Psychic Layer of Being (i.e. the category which determines it belongs to this Layer). If intelligence can be simulated with exclusively inorganic means, for example with a computer, then its core not only must be already present in the Organic but also in the Inorganic. Genuine complete intelligence, i.e. intelligence as we know it, only occurs in the highest organisms, where the Organic is over-built, resulting in a third category Layer added on top of the Organic (which itself lies on top of the Inorganic). So, when simulating intelligence, it is assumed that intelligence, although not naturally occurring in the Inorganic (and also not in the Organic [s.str.] ), can be constructed with purely inorganic means. It is not, and therefore maybe cannot, spontaneously (be) generated by natural inorganic processes. But when it is actually constructed, say, in the form of a computer, it is, despite the detour necessarily involving -- yes -- intelligence, now a property of an inorganic being or system. When actually constructed, intelligence, from now on, occurs in the inorganic world. Surely, it is constructed by a final nexus, but, as we've said, the final nexus' core is causality. So its actual generation is causal.

If we interpret "intelligence" as a case of "being-so", we can express it as an If / Then constant. The If-component then consists of a disjunctive set of sufficient grounds for intelligence (which means -- recall -- that each one of these grounds is already enough to imply the appearance of intelligence). Let us give three of such grounds :

But if every member of the whole disjunctive set of (formally) sufficient grounds is as such impossible, even in principle, then the category does not in any way exist, and is therefore invalid.

Maybe the third sufficient ground of the above list can never occur in the real World, which means that it (i.e. a series of natural causal inorganic events leading to inorganic intelligence) is a physical impossibility. And even the second sufficient ground may never occur, because an intelligent computer is a physical impossibility. If both of these (formally) sufficient grounds are physically impossible in principle, then the Inorganic category Layer does not harbor a category of intelligence (inorganism-based or machine-based intelligence).

Simulation of Consciousness ?

As regards the Psychic Layer, its NOVUM certainly is (or can, collectively, be called) C o n s c i o u s n e s s . Consciousness of a given being (an animal, or whatever) is an active reflection (carried out by that being) on (in the sense of a pondering about) the content of its interior world. In fact, it could be that this act of reflection is the very cause of this interior world's existence at all, i.e. its existence in such a being. So consciousness is, in a certain degree, self-referential. If such an active reflection is, in addition, able to focus on the "its" of "the content of its interior world ", i.e. when it is completely self-referential, then this given being possesses Self-consciousness. And a given being is either constituted (or constructed) in such a way that it is able to carry out such reflections, or it is not (so constituted). There is no in-between. And it seems to be impossible (even in principle) that consciousness, let alone self-consciousness, can be simulated by means of inorganic dynamic patterns such as computers. So the Inorganic does not contain a primordial state of consciousness, not even potentially so. Does the Organic contain such a state? This seems unlikely, because the interior world of consciousness is not spatial. And this is the reason why the Psychic Layer over-builds, instead of over-forms, the next lower Layer.
And even if the category determining consciousness were the result of (just) over-forming of a lower-Layer category (in virtue of some NOVUM that has appeared in the Psychic Layer), this lower-Layer category itself is expected to be new with respect to the Inorganic Layer, that is to say it is expected to be a category only insofar as it is organically over-formed (in virtue of the organic NOVUM). So also then consciousness cannot be traced all the way down to the Inorganic, and thus cannot be simulated by inorganic means. The latter situation is symbolized in the next Figure.

We need not go further with these considerations concerning Intelligence and Consciousness, because our main topic is the Inorganic-Organic comparison, i.e. it is about the first two (lower) real-world category Layers only. The reason that we did consider Intelligence and Consciousness nevertheless, is that such a consideration throws some more light on the essence and ontological nature of simulation (which will play a major role in the Inorganic-Organic comparison).

Simulation, not of organisms, but of individual organic functions or structures.

In the comparison of the Inorganic with the Organic (i.e. in the ensuing 'crystal-analogy'), crystals will be compared with organisms, and simulations of organic functions and structures will be discussed. In order to see this in the appropriate light, the following consideration is important : The corresponding concretum of the o r g a n i c NOVUM can (also) be expressed as follows : Organisms are primarily unstable material configurations that are secondarily stabilized by flexible regulations ( In this way the causal nexus is over-formed, resulting in the nexus organicus). All processes, such as (chemical) assimilation, dissimilation, reproduction, death and phylogenesis (by genetic mutation and natural selection) are a necessary implication of the fact that organisms are primarily unstable structures. The fact that organisms, despite their unstable nature, nevertheless exist is due to these processes, which effect recreation at several levels and, in addition, transformation (when external conditions have changed).

Crystals, on the other hand, are relatively stable configurations (because they are lowest-energy configurations while organisms are not). They do not, therefore, need the above processes in order to remain existent at all. Especially they do not need natural selection, because crystals, taken generally, can (in contrast to organisms) be generated and maintained in a great variety of conditions. And because they are not products of a long evolution, they can easily be recreated after temporarily adverse conditions have returned to more favorable conditions again. But, as a result of them being so, crystals cannot evolve. Liquid crystals (discussed in Part XXIX Sequel-14 ) are an example of inorganic intrinsic beings that, probably, are -- like organisms -- more or less unstable.


Having concluded the above (long) intermezzo, we continue with our considerations about inorganic dynamical systems.

HARTMANN, 1950, p. 491, assumes the presence, already in the Inorganic, of the phenomenon of "wholeness determination" :

There are, according to him, inorganic dynamical systems (and maybe this applies to all such systems) that not only show a determination from the elements (which generally are themselves dynamical systems) to the system (i.e. the system is determined by its elements), but also a determination from the system as a whole to its elements (i.e. certain aspects of the elements are determined by the system as a whole).

In my view this is not so, at least not in inorganic dynamical systems : Here the system is its elements. Of course the system is not identical to any single element of it, and, generally, also not to just their summation. The system wholly consists of its elements, not taken as isolated entities, but as they relate to each other and interact with each other, such that they together form a configuration that represents a possible state of the dynamical system. So in this way a dynamical system is wholly its elements. And in this way too there is no (backwards) determination from the system to its elements, because the system is not something apart from its elements. And the elements determine the system because the system intrinsically contains these elements. The system is the immediate result of the elements.

And because we must interpret "elements" as we did above, i.e. including their patterned distribution (reflecting the simultaneous interdependence) and their interaction (reflecting their causal, and thus non-simultaneous, reciprocal action), because only then are they true elements, it is to be expected that the system as a whole can show features that are not present in any one of its elements, and that are also not just the result of aggregation, but are the result of integration, for instance as we saw it in star-shaped snow crystals : Such a crystal, together with its growing environment, can be seen as a dynamical system, consisting of elements, in this case water molecules (H2O). When such crystals grow very fast in a uniform environment, they develop six arms. In one and the same crystal individual these arms are always of precisely the same morphological type (See Part XXIX Sequel-6 ), which points to some global communication within the snow crystal, more or less in the sense that each arm 'knows' what the others are doing (i.e. how they grow).
It is clear that this phenomenon cannot be derived from the properties of water molecules as they are in themselves. This peculiar feature obviously comes from water molecules that are e l e m e n t s in a crystal lattice, and some others that are in the immediate growing environment of the given (branched) snow crystal. In short, this feature wholly comes from the elements of the dynamical system. So, as regards inorganic dynamical systems, we should not see the system as something separate from its elements, i.e. we should not consider the system as one thing, and its (set of) elements as another (which would then result in the possibility that the system acts on its elements, and which HARTMANN calls "wholeness determination" (Ganzheitsdetermination)). The latter -- HARTMANN's wholeness determination -- is, in the case of inorganic dynamical systems, just the total of external conditions within which the system is embedded. These conditions constrain the system, and make it possible (or impossible) for the system to exist there at all. If these conditions, initially sustaining the system, change, in such a way as to make prolonged existence of the system no longer possible, then the system disintegrates and its elements are taken up by larger systems.

In o r g a n i c dynamical systems, on the other hand, we can expect such a wholeness determination, because here we have to do with the presence of a categorical NOVUM : the nexus organicus.

So we can, on the basis of all this, distinguish inorganic beings (inorganic dynamical systems) from organic beings (organic dynamical systems) by the absence or presence of "wholeness determination".

Apparently teleological nature of inorganic dynamical systems

Before we proceed further, we again remind the reader that our First Part of Website ( First Series of Documents ) contains much that is useful for an up-to-date insight into the generalities of dynamical systems (involving "states", "initial conditions", "trajectories", "attractors", "attractor basins", "attractor-basin field", "dynamical law", "chaotic systems", etc.). We especially recommend the following four documents :

Terms like "aiming to equilibrium", "attractor", "having a tendency", etc. which are used in the theory of dynamical systems, are just convenient images, convenient to visualize and compactly describe complex relationships. But basically they are misleading. For here, i.e. in inorganic dynamical systems, there are no "goals" or "purposes" toward which a system would "tend to go", "aim", or (toward which a system would) "be attracted". Instead of these goals or purposes there are equilibria. But these are not preset.

Energy

Let me be honest with the reader. All the remaining part of this document tries to analyse causality further by involving thermodynamics. Now thermodynamics is a vast and difficult body of enquiry. And I am certainly not an expert in those matters. Moreover my intellectual training (which is mainly in philosophy and geometrical symmetry) does not allow me to catch up quickly in thermodynamic matters. Certainly, I am more or less in possession of the main lines, but my knowledge even of these is predominantly qualitative. So in what follows the reader should not interpret my text as some sort of scientific textbook on thermodynamics. For that he or she must consult a real textbook on the subject. And because I am not technically acquainted with thermodynamics, errors (in my text) are possible. The reader should be aware of this. The only thing I have tried to do is use the thermodynamic things I know, or think I understand, for the benefit of an extended categorical analysis of causality. I hope that every amendment the reader finds necessary will be communicated to me. In this way this document (and the next) could increase in quality and consistency.

It must have struck the reader that we, when discussing Causality and Dynamical Systems, spoke little about energy, in spite of the evident fact that energy relations, energy conversions, and differences between energy levels (energy fall), must play a decisive role in Determination and Process. We will now rectify this shortcoming.

Considerations about energy are especially important in the case of dissipative systems or structures. And moreover, while some, but not all, inorganic processes are dissipative, all organic dynamical systems, without exception, are dissipative systems. And being 'dissipative' means that these systems import matter and energy and export matter and entropy, and are therefore in a state far from thermodynamic equilibrium (and will as such be contrasted with systems that are in a state of thermodynamic equilibrium or near-equilibrium, as is the case in crystallization).

Entropy, as it was just mentioned, can be described as the degree of ' leveling out ' of energetic differences, which in turn means that work can no longer be performed, despite the presence of energy.
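A small worked case may make this 'leveling out' more tangible. It is only a sketch under simplifying assumptions that are not in the text above : two identical bodies with constant heat capacity $C$, at absolute temperatures $T_1$ and $T_2$, isolated from everything else and brought into thermal contact. They end up at the common temperature $T_f$, the total energy is unchanged, and the entropy has increased :

$$
T_f = \frac{T_1 + T_2}{2}, \qquad
\Delta S = C \ln\frac{T_f}{T_1} + C \ln\frac{T_f}{T_2}
         = C \ln\frac{(T_1 + T_2)^2}{4\,T_1 T_2} \;\ge\; 0 ,
$$

with equality only when $T_1 = T_2$. Before the leveling, an ideal (reversible) engine running between the two bodies could at most have delivered the work

$$
W_{\max} = C\left(\sqrt{T_1} - \sqrt{T_2}\right)^2 ,
$$

whereas after the leveling no work at all can be obtained from the pair, although all the energy is still there.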

In the next Sections of the present document we will again discuss Causality, but now how it is related to energy, and further how energy is related to the mentioned dissipative systems. The purpose of all this is to obtain still more insight into the inorganic analogues of organic pattern formation, i.e. we do not limit our discussion of these inorganic analogues to just the formation of crystals, which are thermodynamically near-equilibrium systems, but will also discuss the very important inorganic d i s s i p a t i v e systems, which are thermodynamically far-from-equilibrium systems (As such they are treated in First Part of Website, first Series of Documents : Non-living Dissipative Systems ).

Here, in the present document, we will concentrate on the general categorical elements involved in the thermodynamics of dissipative systems (as compared to non-dissipative systems), especially the types of determination (i.e. the determinations -- nexus categories -- that are involved in pattern-generating inorganic dissipative dynamical systems).

Causality, Energy, and Entropy.

This Section discusses in what way and to what extent e n e r g y is involved in causality (as we see the latter at work in dynamical systems). So in doing so it further investigates the nature of causality.

However, I must urge the reader not to take this Section too seriously, because I, unfortunately, am not an authority in Thermodynamics. So the content of this Section is only a qualitative and intuitive attempt to understand the role that energy plays in causality. Maybe the reader can improve on it, or refute it altogether. Please let me hear!

Earlier we had established that causality connects, to begin with, two states : the cause and the effect. The effect is different from the cause, in the sense that it is creatively different : the (pure) effect is produced from and by the cause as something totally new, and consequently the difference of the effect is as it is, i.e. it is not intelligible.

Now, one could surmise that the difference of the effect could consist in the effect being a state of lower potential energy (as compared with the cause). And so the difference is intelligible after all. However, there exist energetically up-hill processes (for example the morphogenesis of an organism), which means that if we want to understand these processes energetically, we must include the environment from which energy is taken up by the (up-hill) process. But this implies that we must extend our process to the whole Universe, because the energetic environment of such a process is in fact not bounded. And while the total amount of energy (potential + actual energy) of the Universe stays the same (First Law of Thermodynamics), the total amount of potential energy of the Universe will decrease. So we could characterize our process as effecting a decrease of the potential energy.

This, however, can perhaps be better expressed by means of a related concept, namely that of entropy.

According to the Second Law of Thermodynamics, whatever process takes place, the total amount of entropy (of the Universe) cannot decrease, where, as has been said, "entropy" is a measure of the degree of leveling-out of energy differences. We can imagine a configuration of particles that attract one another, but repel when they come very close to each other. When the particles are far apart, the configuration possesses potential energy, but when they are pressed more or less closely together, the configuration also possesses potential energy. In both cases we have to do with stress so to say, and the system wants to get rid of this stress. So when left alone, the result will be that the particles, when far apart, move toward each other (potential energy will be converted into actual (in this case kinetic) energy). But when they come, as a result of this movement, too close to each other (potential energy being built up again) they will repel each other and thus move apart again, and, as the kinetic energy is gradually dissipated, the result is a configuration of equilibrium with minimal potential energy. This is just a mechanical system which will settle at its equilibrium. Dissipative systems, however, do not settle at or near equilibrium, but are held far from equilibrium. And this means that such a system has a low entropy with respect to its surroundings. But according to the Second Law of Thermodynamics such a system would, it seems, not be able to get off the ground in the first place, because a decrease of entropy is involved. This is solved by nature as follows : While the entropy in a dissipative pattern-generating dynamical system decreases (which as such is a local decrease of entropy, and a local increase of order), the net entropy increases nevertheless (i.e. if we include the dissipative system's environment, and, if necessary, the whole Universe, the total amount of entropy always increases). So the Second Law is not violated.
Thus we could surmise that causality is, in all cases, necessarily linked up with entropy increase.
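The bookkeeping behind this can be written down compactly. What follows is the standard textbook formulation, not something derived in the present document, and the symbols are introduced here only for illustration : $\Delta S_{\mathrm{sys}}$ for the entropy change of the pattern-generating system and $\Delta S_{\mathrm{env}}$ for that of its surroundings.

$$
\Delta S_{\mathrm{total}} = \Delta S_{\mathrm{sys}} + \Delta S_{\mathrm{env}} \;\ge\; 0 ,
\qquad\text{so that}\qquad
\Delta S_{\mathrm{sys}} < 0 \;\Longrightarrow\; \Delta S_{\mathrm{env}} \;\ge\; \left|\Delta S_{\mathrm{sys}}\right| .
$$

For an open system the same point is usually expressed by splitting the entropy change of the system into an exchange term and an internal production term :

$$
dS_{\mathrm{sys}} = d_e S + d_i S , \qquad d_i S \ge 0 .
$$

In a stationary far-from-equilibrium state $dS_{\mathrm{sys}} = 0$, hence $d_e S = -\,d_i S \le 0$ : the system keeps its order only by exporting to its surroundings at least as much entropy as it produces internally.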

However, entropy increase can be accomplished in many ways. So we can understand only one single aspect of the effect (i.e. the state that follows from the cause), namely that the net entropy change must be positive, or, equivalently, the total entropy of the Universe must increase, in order for this effect to be realized. In other words, when whatever process has taken place, the total entropy of the Universe has then been increased as a result of this process. So we now have at least a partial understanding of what (kind of) effect must follow from a given cause.

We could perhaps make the production of the effect from the cause completely (instead of only partially) intelligible by assuming that the effect is necessarily that state which involves the largest increase of entropy (i.e. increase as seen from the cause). In that case the effect would be totally determined (provided there is only one single configuration -- making up the effect -- that involves the largest entropy increase), and thus totally intelligible. The decrease of entropy as a result of the generation of a dissipative patterned structure can be neutralized by a same increase of entropy of the surroundings, and of course also by a still larger increase of the entropy of the surroundings. But a largest increase would only take place when all the other energy falls everywhere in the Universe are leveled out. But although it is evident that Nature locally tends to leveling out things, because this means relaxation (accounting for the spontaneity of processes that bring about this relaxation), this tendency is perhaps not so evident at the global scale, i.e. at the scale of the Universe as a whole.

If we accept the latter, i.e. if we indeed hold that the Universe-as-a-whole does not necessarily tend to maximally relax when it is locally stressed, but that for it just a little more than cancelling out the locally originated stress is sufficient to allow for the effect to take place, and if we moreover realize that entropy increase as such can be accomplished in many different ways, then entropy increase is only a conditio sine qua non (an indispensable but not necessarily sufficient condition) for causality (to take place). All other aspects of causality, i.e. all other aspects of the production of the effect, still remain unintelligible. Given a certain cause, its effect is (only) partially conditioned (and thus only partially understood) as a result of the demand that the net entropy change be positive, realized either directly by the system itself (for instance in the case of a falling body), or by its immediate surroundings. The latter case is the one where the (pattern-generating dissipative) system enters into a state of higher order (and thus lower entropy), implying a definite pattern, and thus differences. And these in turn mean (increase of) stress, which, more generally, can mean an increase in potential energy, for example when we stretch out a spring ( In this particular example the increase in stress already takes place without the increase of order, namely just because attracting particles come to lie farther apart, which increases the potential energy ). The entropy decrease that took place within the (pattern-generating) dynamical system generally becomes a little more than (just) cancelled in virtue of an entropy increase of the immediate surroundings of the system. And because the net change of entropy is positive (entropy increases), the area containing the system is relaxed (as compared with its state before the pattern was generated).
And this is already sufficient (for the effect to take place, where the cause is, for example, the initial state of a pattern-generating dissipative dynamical system, and the effect is the generated pattern and its surroundings where the entropy increase has taken place). The relaxation does not need to be maximal (i.e. is not extending across the whole Universe). Therefore this relaxation can be accomplished in many different ways, which implies that the specific and constant way of relaxation (the specific effect) which is produced by a particular cause in a repeatable way (same cause, same effect) is still not intelligible. Therefore causality is still inherently creative, even when energy restrictions are included.

In the next Section we will elaborate still more on the relationship between causality and entropy.

Causality and the spontaneity of entropy increase.

Entropy increase is a transition from an unstable (more) ordered configuration of material elements to a more disordered configuration. Two examples are given.

An example, taken from (near) equilibrium systems, is the following : A supersaturated solution of a given chemical compound is an unstable configuration of material elements. Its overall orderliness is higher than that of the next configuration, which is : crystal and solution, i.e. the generated crystal, now situated in a (just) saturated solution (which belongs to the system). Although the crystal is more ordered than any solution, heat was given off into its surroundings, the solution. This causes an increase of the thermal agitation of the molecules in the solution, and thus an increase in entropy of that solution. And this increase must -- according to the Second Law of Thermodynamics -- be such that the net change of the entropy is positive, i.e. the entropy of the system as a whole -- crystal + solution -- must increase (despite the local increase of order in the system : the crystal (lattice)). The same applies to the phenomenon of solidification (crystallization) of some molten material after super-cooling, which makes the configuration unstable : a more ordered state appears during crystallization, it is true, but heat is given off to the environment, increasing its entropy, and this environment belongs to the system.
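The entropy bookkeeping of such a crystallization can be sketched numerically. The following is only a rough illustration under stated assumptions, not a treatment taken from the source : the function name net_entropy_of_freezing is hypothetical, the material is taken to be super-cooled water, the heat given off is assumed not to vary with temperature, and the entropy loss of the crystallizing material is approximated by its value at the melting point.

```python
def net_entropy_of_freezing(latent_heat, t_melt, t_actual):
    """Rough entropy balance for crystallization from a super-cooled melt.

    latent_heat : heat given off on crystallization, J/mol (assumed constant)
    t_melt      : melting temperature, K
    t_actual    : temperature of the super-cooled melt and surroundings, K
    Returns (entropy change of the crystallizing material,
             entropy change of the surroundings,
             net entropy change), all in J/(mol K).
    """
    ds_material = -latent_heat / t_melt         # local increase of order
    ds_surroundings = latent_heat / t_actual    # released heat agitates the surroundings
    return ds_material, ds_surroundings, ds_material + ds_surroundings


# Illustrative values (assumption): water, about 6010 J/mol, super-cooled by 10 K.
ds_material, ds_surroundings, ds_net = net_entropy_of_freezing(6010.0, 273.15, 263.15)
print(ds_material < 0, ds_surroundings > 0, ds_net > 0)
```

Under these assumptions the local entropy decrease is slightly more than cancelled by the entropy increase of the surroundings, and exactly at the melting point (no super-cooling) the two contributions cancel.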

See (for 'authorization') NOTE 4 .

An example, now taken from far-from-equilibrium systems, is the death and decomposition of an organism : The ordered configuration passes over into a disordered state, as soon as the barriers against decomposition are removed, resulting in an increase of entropy. In itself the far-from-equilibrium structure is ordered, but unstable.

The fact that entropy increase is spontaneous, can be understood, because it is equivalent to 'leveling things out', and therefore to 'relaxation'.

So it is clear that an unstable (more) ordered configuration (intrinsically unstable, because it is actively upheld or forced into the (more) ordered state) will, when all possible barriers are removed, spontaneously transform into a (more) disordered state of the system as a whole (net increase of entropy), because in such a state everything is leveled out (at least more so than in the initial state), which means that the system-as-a-whole now finds itself in a (more) relaxed overall condition.

So it is at least intuitively evident that the system will spontaneously pass over into this more relaxed condition as soon as relevant barriers are removed (When we let go a stretched spring, it will spontaneously contract).

This spontaneous transition from an ordered state to a disordered state is sometimes explained as follows :

Because, with respect to a given set of (different) elements, there generally are very many more mathematically possible disordered configurations (of the elements of the set) than there are ordered configurations, the chance that, in the case of a re-configuration of the elements, an ordered configuration will (after the fact) be seen to have been followed by a disordered configuration is very much larger than the chance that an ordered configuration will (after the fact) be seen to have been followed by another ordered configuration, and certainly also larger than the chance that a disordered configuration will be seen to have been followed by an ordered one.

Such an explanation would only make sense if the initial configuration or state were indeterminate as to the nature of the next configuration (state), i.e. if every mathematically possible configuration were equally possible physically. Then indeed the chance is very large that an ordered configuration (state) (or a disordered configuration for that matter) will (after the fact) be seen to have been followed by a disordered configuration (state) ( Where "followed by a disordered configuration" in fact means : "followed by one or another disordered configuration" ), because there are so many more disordered configurations (of the elements of the given set) than there are ordered ones.
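The counting on which this statistical explanation rests can be made concrete with a toy model. The sketch below is an assumption made for illustration only and is not taken from the source : n distinguishable particles are each placed in the left or right half of a box, a configuration counts as "ordered" when all particles sit in the same half, and every configuration is taken to be equally likely (which is exactly the premise questioned in the text). The function name fraction_disordered is hypothetical.

```python
def fraction_disordered(n: int) -> float:
    """Fraction of all left/right placements of n particles that are 'disordered'.

    Toy assumption: 'ordered' means all particles in one half of the box
    (2 configurations); every other configuration counts as disordered.
    """
    if n < 1:
        raise ValueError("need at least one particle")
    total = 2 ** n                 # each particle independently left or right
    ordered = 2 if n > 1 else 1    # all left, or all right
    return (total - ordered) / total


for n in (2, 10, 50):
    print(n, fraction_disordered(n))
```

Already for a modest number of particles almost every mathematically possible configuration is a disordered one, which is all that the statistical argument asserts.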

There is, however, no reason to believe that any given configuration of (an intrinsic dynamical system of) material elements is indeterminate as to the nature of the next state (configuration), i.e. it is not so that, given a particular state (configuration), there is more than one next state that can emerge from this given initial state. On the contrary, we'd better assume that in all such cases causality rules over things. So the initial state (initial configuration of material elements) is the cause of the next state (next configuration), which is the effect. This next state is completely determined by the previous state (of that same dynamical system). One aspect of this "being completely determined " is that the system will necessarily relax as soon as barriers (to do so) are removed. So in our case of an unstable ordered configuration of material elements (where the barrier for (spontaneous) transformation is removed) -- which is the cause -- we will obtain a disordered configuration -- which is the effect. This is the part of the "effect being completely determined " demanded by thermodynamics. The other part of this being determined consists in the fact that from a particular (i.e. given) initial configuration (of material elements) a particular disordered configuration will follow (instead of any other disordered [or ordered for that matter] configuration), i.e. one specific (disordered) configuration out of the many that are mathematically possible. This constant relation between a particular initial ordered configuration and a particular disordered configuration (instead of some other [disordered] configuration) is the creative, genuinely productive, and therefore non-intelligible aspect of causality. In accordance with this, another particular initial configuration will give another particular configuration as its next state.

All these considerations are based on the (validity of the) Second Law of Thermodynamics (What follows is taken from a university manual written by Van MIDDELKOOP, 1971, pp.58). This Second Law can be expressed in terms of heat engines in the following way :

Q1 (heat supply to engine) = Q2 (heat given off to the environment) + W (work done by ideal machine).

The work done by the machine would be maximal when Q2 = 0. Then we would have Q1 = W, which means that all the imported heat would be transformed into work. But this is never the case because in, say, a steam engine, the used steam always still possesses a considerable amount of heat.

Q2 is a function of T2. If T1 and T2 are absolute temperatures (T1 at the heat supply, T2 at the heat exit), then it turns out that

Q1 / Q2 = T1 / T2.

And if T2 = 0 (absolute zero temperature), then Q2 = 0.

Only in that case is all heat in the ideal engine (no heat leakage, no friction) transformed into mechanical work. Apart from this, there is (even in an ideal engine), after work has been done, always some heat left that must be exported.

Carnot (1796-1832) defined the ideal heat engine, which has no internal friction and works only on the basis of a temperature difference. Schematically : [Figure : scheme of the ideal heat engine]

Let us now calculate the efficiency of an ideal steam engine (using the above relation Q1 / Q2 = T1 / T2).

T1 = 373 K, T2 = 288 K, so the efficiency is : 1 - 288 / 373 = 0.23, that is to say, the efficiency of even an ideal heat engine is only 23% (!).
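This arithmetic can be checked with a minimal sketch. It assumes that efficiency means W / Q1, which with Q1 = Q2 + W and Q1 / Q2 = T1 / T2 gives 1 - T2 / T1. The function name carnot_efficiency is a hypothetical helper introduced for illustration.

```python
def carnot_efficiency(t_hot: float, t_cold: float) -> float:
    """Ideal efficiency W / Q1 = 1 - T2 / T1, both temperatures in kelvin."""
    if t_hot <= 0 or t_cold < 0 or t_cold > t_hot:
        raise ValueError("need absolute temperatures with 0 <= t_cold <= t_hot and t_hot > 0")
    return 1.0 - t_cold / t_hot


# Steam at 373 K (T1), environment at 288 K (T2), as in the text.
print(f"{carnot_efficiency(373.0, 288.0):.2f}")   # prints 0.23
```

The same function shows the limiting case mentioned earlier : only for t_cold = 0 does the efficiency reach 1.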

A real engine will, as a result of losses, have a still lower efficiency.

The Carnot engine can also be reversed : By supplying mechanical work the exit temperature T1 is made higher than the entrance temperature T2. Schematically : [Figure : scheme of the reversed heat engine]

This is the case of the refrigerator and the air-conditioner. A refrigerator cools its contents (and heats the room in which it stands), thus reversing the flow of entropy and increasing the order within the refrigerator, but only at the expense of the increasing entropy of the power station producing the electricity that drives the refrigerator motor. The entropy of the entire system, refrigerator and power source, must not decrease -- and, in practical matters, will increase ( Here there is a flow of heat from lower temperature to higher temperature, but this flow is not accomplished in a self-acting way, i.e. it is not spontaneous, it is driven.).
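The entropy balance of such a driven heat flow can be sketched as follows. This is a simplified illustration under stated assumptions, not the source's own calculation : only the cold interior and the warm room are treated as heat reservoirs, the supplied work is taken to carry no entropy (so the power station is left out), and the function names are hypothetical.

```python
def refrigerator_entropy_change(q_cold, work, t_cold, t_hot):
    """Total entropy change when heat q_cold is removed at t_cold and
    q_cold + work is delivered to the room at t_hot (temperatures in kelvin)."""
    q_hot = q_cold + work                  # energy balance
    return q_hot / t_hot - q_cold / t_cold


def minimum_work(q_cold, t_cold, t_hot):
    """Smallest work for which the total entropy does not decrease."""
    return q_cold * (t_hot / t_cold - 1.0)


w_min = minimum_work(1000.0, 277.0, 293.0)
print(refrigerator_entropy_change(1000.0, 0.0, 277.0, 293.0) < 0)          # no work: forbidden
print(refrigerator_entropy_change(1000.0, 2 * w_min, 277.0, 293.0) > 0)    # driven: allowed
```

Without supplied work the flow from cold to warm would lower the total entropy; with at least the minimum work it does not, which is the sense in which the flow is driven rather than spontaneous.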

Now we can formulate the Second Law very concisely by means of the concept of entropy. The following discussion will result in this formulation.

The entropy S is a quantity that is only dependent on the amount of transported heat and the temperature T at which this transport takes place, and can be defined by its change :

dS = dQ / T

The change of entropy dS is then equal to the amount of transported heat dQ divided by the temperature T at which this change occurs.

The Second Law of Thermodynamics now is equivalent to the following statement :

In a physical or chemical process the entropy S i n c r e a s e s, until equilibrium has been reached.

And this statement is equivalent to :

Heat only s p o n t a n e o u s l y flows from higher to lower temperatures.

This latter statement will be made clear as follows :

Consider two systems with initial temperatures T1 and T2 , where T2 > T1 (where > means : greater than, whereas < means : smaller than), and where these systems are in contact with each other. Suppose that initially S = S1 + S2 (i.e. the total entropy is the entropy of the first system plus the entropy of the second system).

Because of the temperature difference there is a flow of heat from system 2 to system 1. This means that S1 increases by an amount dS1 = dQ / T1 when an amount of heat dQ is transported from 2 to 1.

And because system 2 loses heat we have dS2 = - dQ / T2 (i.e. dS2 is minus dQ / T2 ).

So dS ( = the change of S with respect to the total system (1 + 2)) =

dS1 + dS2 = dQ / T1 - dQ / T2 = (1 / T1 - 1 / T2)dQ. And this is a positive quantity, i.e. dS > 0, because T2 > T1 , therefore 1 / T2 < 1 / T1 and therefore 1 / T1 - 1 / T2 > 0.

Further we know that dQ > 0, so (1 / T1 - 1 / T2)dQ = dS > 0.

So the entropy increases, until T1 = T2 (equilibrium). If the heat had spontaneously flowed the other way around (from something having a lower temperature to something having a higher temperature) dS would be negative, which would contradict the Second Law.

Indeed, when T1 = T2 , 1 / T1 = 1 / T2 , so 1 / T1 - 1 / T2 = 0, and therefore

(1 / T1 - 1 / T2)dQ = 0, and thus dS = 0 (the entropy has become constant).
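The derivation above can be followed step by step in a small simulation. It is a sketch under assumptions that are not in the source : both systems have the same constant heat capacity, the heat transported per step is proportional to the temperature difference, and the names entropy_change and equilibrate as well as the parameter values are hypothetical.

```python
def entropy_change(dq, t1, t2):
    """dS = dS1 + dS2 = dQ / T1 - dQ / T2 for heat dq passing from system 2 to system 1."""
    return dq / t1 - dq / t2


def equilibrate(t1, t2, heat_capacity=1.0, conductance=0.01, steps=2000):
    """Let heat flow between two systems in contact; return final T1, T2 and total dS."""
    total_ds = 0.0
    for _ in range(steps):
        dq = conductance * (t2 - t1)        # heat flows from the hotter to the colder system
        total_ds += entropy_change(dq, t1, t2)
        t1 += dq / heat_capacity            # system 1 gains the heat
        t2 -= dq / heat_capacity            # system 2 loses it
    return t1, t2, total_ds


t1_end, t2_end, ds = equilibrate(300.0, 400.0)
print(round(t1_end, 3), round(t2_end, 3), ds > 0)
```

Every step contributes a non-negative dS, and the contributions vanish as T1 and T2 approach each other, in line with dS = 0 at equilibrium.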

Entropy can be considered a measure of chaos (disorder) (Van MIDDELKOOP, G. 1971, pp. 58-60).

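Entropy figures in the Second Law of Thermodynamics. What then is the First Law?

The First Law expresses the conservation of energy : when a small amount of heat dQ is added to a system (say, a gas) and a small amount of work dA is done on it, its internal energy U changes by dU = dQ + dA.

(In fact we should write dQ and dA with the Greek letter delta, because only dU is a complete differential).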

For the sum of a series of successive small quantities (increments) like these, we use integrals,

$\int dQ$, $\int dA$, $\int dU$,

because the series of increments need not be regular. We can also say that expressing them as integrals is a generalisation of dQ, dA, dU, etc.

If the change of the system is not small, for instance when we add much heat and compress the gas strongly, then the added heat Q and the added work A will be expressed as these integrals, and we get

$\int_1^2 dQ + \int_1^2 dA = \int_1^2 dU = U_2 - U_1$ ........................ (1)

where 1 and 2 respectively indicate the initial state and the end state, and where the sum of the small energy changes, $\int_1^2 dU$, is the energy difference $U_2 - U_1$ between the end state and the initial state.